Gesture Control: My Slippery Savior

Rain lashed against my windshield like angry nails as I white-knuckled the steering wheel through Friday rush hour. My playlist's jarring shift from calming jazz to death metal coincided with a curve slick with oil – fingers fumbling toward the phone felt like gambling with my life. That's when I remembered the impulsive midnight download: an app promising control through air gestures. Skepticism warred with desperation as I raised a trembling hand and sliced left through the humid car air.

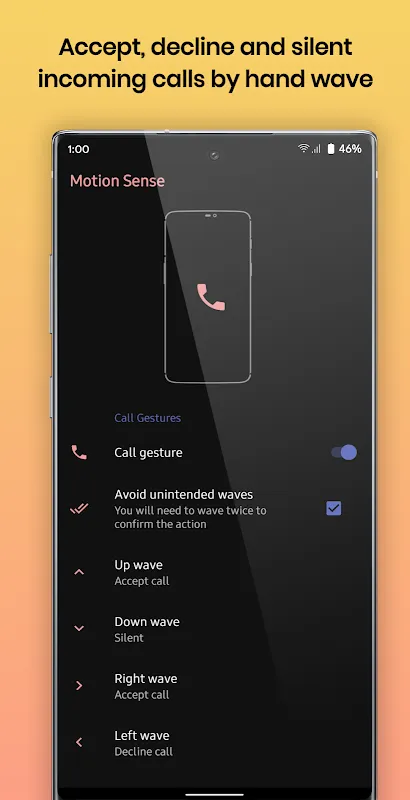

Silence. Then the sweet piano notes returned. My breath escaped in a shudder I hadn't realized I'd trapped. This wasn't just convenience; it felt like cheating death. The app – let's call it Wave Command – used something deeper than basic motion detection. Later, digging into developer notes, I learned it fed gyroscope data into machine learning models trained on erratic real-world movements. It didn't just see gestures; it anticipated them through vibration patterns and subtle wrist angles, filtering out bumps or sudden stops. Pure sorcery for someone who once caused a three-car pileup reaching for a volume button.

At home, victory turned to humiliation. Covered in flour and egg during a baking disaster, I heard my phone ring. A dismissive flick of my sticky wrist – nothing. Three furious waves later, voicemail swallowed my client's call. Rage simmered as I scrubbed dough from the screen. Why did it work flawlessly at 70 mph but fail in a stationary kitchen? The betrayal stung worse than lemon juice in a paper cut. Turns out, overhead LED lights created interference patterns that confused the sensors – a flaw never mentioned in the shiny app description. I cursed its name into a tea towel.

Yet the next morning, as I repaired antique furniture, epoxy coated my hands like toxic gloves. When the delivery notification chimed, instinct took over: palm raised, fingers closing slowly. The gate unlock confirmation flashed. No ruined phone, no sticky keypad. That precise moment – chemicals drying on my skin, sunlight catching dust motes, the soft click of the gate – imprinted itself. The cost of convenience wasn't just subscription fees; it was trusting invisible algorithms with critical tasks. One misfire could mean a missed ambulance call or a wrecked car. The weight of that dependency settled cold in my stomach even as relief warmed my palms.

Driving through fog so thick it felt suffocating, I tested its limits. A sharp downward chop skipped tracks reliably. Circling two fingers clockwise increased volume incrementally – a nuance requiring predictive calibration that adjusted for my tremor on caffeine-heavy mornings. But asking it to decline a call with a clenched fist? Disaster. My furious gesture answered the telemarketer, blasting his pitch through the speakers at deafening volume. I screamed profanities he definitely heard. For all its brilliance in recognizing swipes, its interpretation of complex gestures remained clunky guesswork. The embarrassment burned hotter than the coffee I spilled lunging for the cancel button.

Now, I move through chaotic days with odd, subtle gestures – a conductor without an orchestra. Waving at my phone on the gym treadmill looks insane, but pausing podcasts mid-sprint without breaking stride feels revolutionary. Yet every interaction carries low-grade anxiety. Will it read my haste as a command or dismiss it as vibration? That tension between liberation and vulnerability defines this tech – freeing my hands but shackling my trust to lines of code. When it works? Magic. When it fails? You're just a sweaty fool waving desperately at glass.

Keywords: Motion Sense, news, gesture technology, driving safety, sensor limitations