My AI-Powered Skin Guardian

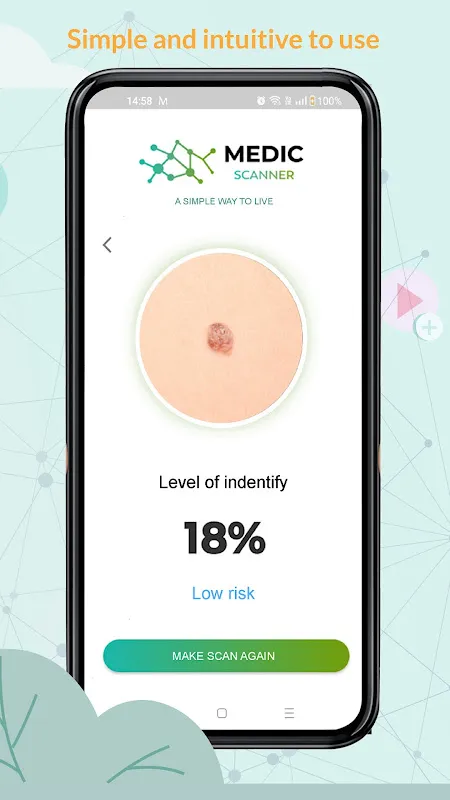

That Tuesday morning, steam still clung to the shower tiles when my fingers brushed against it: a raised, asymmetrical intruder just below my collarbone. My breath hitched mid-lather. Grandpa’s funeral flashed before me: the hushed whispers of "melanoma," the coffin’s polished wood gleaming under church lights. I scrambled out, dripping, and pressed my phone’s cold screen against the alien shape. Medic Scanner’s interface blinked awake, its clinical blue tones a stark contrast to my trembling hands. The app demanded stillness I didn’t possess; my pulse drummed against the lens like a trapped bird.

When the analysis circle completed its spin, the result blazed red: "High-risk features detected. Consult dermatologist within 48 hours." I laughed—a jagged, humorless sound—because the convolutional neural networks analyzing my skin had just mirrored my deepest terror. Later, hunched over hospital paperwork, I’d learn how those algorithms devoured millions of dermoscopic images, training on pixel patterns invisible to untrained eyes. Yet in that moment, all I felt was the app’s brutal efficiency slicing through denial like a scalpel.

Three days later, in a sterile exam room, the dermatologist’s magnifier hovered over Medic Scanner’s evidence. "Impressive," she murmured, tracing the mole’s irregular borders on her tablet alongside the app’s heatmap overlay. "Your little AI assistant nailed it." Her praise stung more than the biopsy punch. Because while Medic Scanner’s ensemble learning model—combining texture analysis and color clustering—had flagged the danger, it couldn’t replicate her fingertips assessing the lesion’s firmness, or her seeing my grandmother’s eyes in mine. The app quantified risk; she quantified hope.

False alarms became my unwanted ritual. One evening, Medic Scanner declared a sunspot on my forearm "suspicious" after a beach day. The notification vibrated during dinner, murdering my appetite. For hours, I obsessed over its zoomable high-res image, comparing shadow gradients until dawn streaked the windows. When the dermatologist dismissed it as benign, I wanted to hurl my phone into the ocean. Yet the next morning, I scanned again, because the app’s cruel genius lies in how its YOLOv5 architecture spots minuscule changes I’d miss. Each alert felt like betrayal; each all-clear, a temporary pardon.

Rain lashed against the clinic window when biopsy results arrived. "Precancerous," the report read. Not the death sentence I’d feared, but a warning shot across the bow. As the nurse prepped the excision site, I opened Medic Scanner’s history gallery. There it was: the mole’s evolution from "low risk" six months prior to its final crimson diagnosis. The app’s timeline feature—leveraging temporal analysis algorithms—had silently documented the rebellion in my cells. I deleted the image post-surgery, a digital burial for a lesion that almost stole my future.

Now I scan religiously, a monthly ritual more punctual than my mortgage payments. Medic Scanner’s camera flash illuminates my skin like a forensic spotlight, its machine learning now intimate with every freckle and scar. Sometimes I rage at its limitations: how it misreads angiomas as threats, or fails in dim lighting. But when it detected a changing nevus on my partner’s back last month, I kissed its flawed, life-saving interface. Our bathroom mirror holds two reflections now: human fear and algorithmic vigilance, locked in their uneasy dance against the darkness we carry in our skin.

Keywords: Medic Scanner, news, AI dermatology, skin cancer detection, mole tracking