My Night Sky Savior: Pixel Camera's Magic

That Alaskan chill still haunts me – not from the icy wind, but from the sheer rage bubbling inside as I watched those pathetic excuses for aurora photos populate my gallery. My fingers went numb fumbling with settings while cosmic emerald waves danced overhead, only to be betrayed by my phone's feeble sensor. What should've been luminous ribbons became grainy sewage-green blobs that made me want to hurl the device into the Bering Sea. The cruise ship's photographer smirked when he saw my shots, muttering "tourist garbage" under his breath. I've never felt such white-hot shame holding a piece of technology.

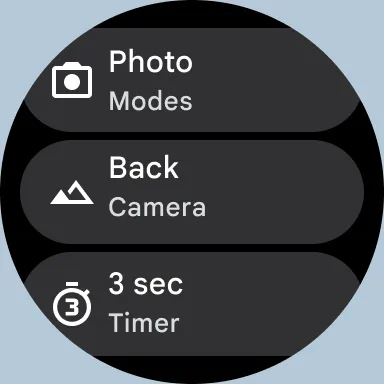

Then came the intervention from a German backpacker at 3 AM on the observation deck. "Use this or quit photography," she growled, jabbing at my screen until Pixel Camera's interface appeared. Skeptical but desperate, I tapped the shutter as she adjusted my grip. The preview loaded... and my jaw hit the deck. Where there was noise, now lay razor-sharp constellations; where murkiness drowned colors, violet and crimson ribbons sliced through the blackness with shocking clarity. That first proper shot – the one where the aurora curled like a neon serpent above the glaciers – still hangs above my desk today. I must've taken 300 more before dawn, drunk on the power of finally capturing what my eyes witnessed.

The Technical Sorcery Explained

Later that week, during a glacier hike, I obsessed over how this witchcraft worked. Turns out that unassuming app doesn't just take photos – it wages computational warfare against darkness. While basic cameras surrender to noise in low light, Pixel's algorithm fires off a rapid burst of up to 15 exposures, aligns them, and merges them, keeping the pixels that agree from frame to frame and discarding the digital garbage. It reconstructs scenes photon by photon, preserving true colors your eyeballs see but sensors normally murder. That hummingbird I shot at sunrise? The app detected wing motion and stacked frames to eliminate blur while boosting shadow details in the feeder – something requiring $10,000 lenses before this tech existed.
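
For the curious, the frame-stacking idea can be sketched in a few lines of Python. This is a toy illustration and not Pixel Camera's actual pipeline: the function names, the simulated scene, and the noise level are all my own assumptions, and it skips the hard parts (aligning frames and handling motion). It only shows why merging a burst beats a single exposure: random noise differs from frame to frame, so averaging cancels much of it while the real signal stays.

```python
import random
import statistics

def capture_burst(scene, n_frames=15, noise_sigma=20.0, seed=0):
    """Simulate n_frames noisy exposures of one scene (hypothetical helper).

    scene is a flat list of "true" pixel brightness values; each exposure
    adds independent Gaussian noise to every pixel.
    """
    rng = random.Random(seed)
    return [[p + rng.gauss(0.0, noise_sigma) for p in scene]
            for _ in range(n_frames)]

def merge_frames(frames):
    """Merge a burst by taking the per-pixel mean across all frames."""
    return [statistics.fmean(pixel_stack) for pixel_stack in zip(*frames)]

def rms_error(image, truth):
    """Root-mean-square difference between an image and the true scene."""
    return (sum((a - b) ** 2 for a, b in zip(image, truth)) / len(truth)) ** 0.5

# A made-up dim scene: mostly dark pixels with a few brighter ones.
scene = [10.0, 12.0, 40.0, 200.0, 35.0, 11.0] * 200

frames = capture_burst(scene)
merged = merge_frames(frames)

single_error = rms_error(frames[0], scene)
merged_error = rms_error(merged, scene)

print(f"single frame RMS error: {single_error:.2f}")
print(f"merged burst RMS error: {merged_error:.2f}")
```

With independent noise, averaging N frames should cut the noise by roughly a factor of the square root of N, so about 3.9 times for a 15-frame burst. A real camera also has to line the frames up and decide what to do with anything that moved, which is where the serious engineering lives.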

Beyond the Aurora

Months later, renovating my basement became another stress test. Under the sickly glow of a single 40-watt bulb, I needed documentation of pipe layouts. Previous attempts yielded pitch-black voids with flashlight hotspots. But firing up the app in Night Sight mode? It rendered the cramped space like a studio photoshoot – mortar lines between bricks visible, copper pipes gleaming, even dust motes floating in the beam. The contractor thought I'd used professional lighting rigs. That's when it hit me: this wasn't just about pretty pictures. It gave me forensic-grade documentation superpowers.

The rage has morphed into giddy addiction now. I catch myself taking pointless photos just to watch the computational ballet unfold – a streetlamp reflected in oily puddles, steam rising from midnight coffee. Does it drain my battery? Brutally. Does it sometimes over-process clouds into cotton candy? Absolutely. But when I swipe through my gallery now, every image sparks visceral memories instead of embarrassment. That German woman probably doesn't know she saved my relationship with photography using a 25MB download. Some miracles fit in your pocket.
