Zenduty: The Whisper in Infrastructure Chaos

There's a particular shade of blue that haunts me – the exact hue of our monitoring dashboard when critical systems flatline. I remember clutching my lukewarm coffee, watching service maps bleed crimson as our European CDN nodes dropped offline during peak shopping hours. My Slack exploded with panic emojis before I could even reach for my phone. Then, a vibration cut through the chaos: not the usual cacophony of disjointed PagerDuty alerts, but a single, curated pulse from Zenduty. It felt like grabbing a lifeline thrown into stormy seas.

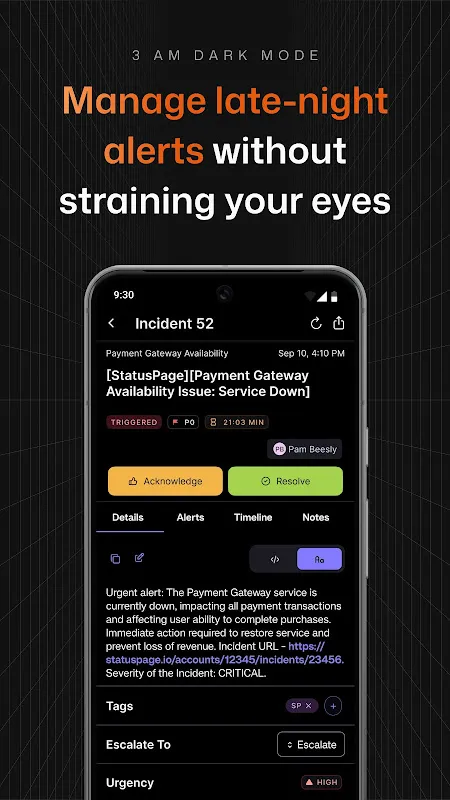

What happened next wasn't just a notification; it was orchestrated warfare against downtime. While colleagues scrambled across seven different communication channels, Zenduty's mobile interface became my war room. With three taps, I muted the redundant Nagios alerts that usually drown out the real signal. The platform had already correlated alerts from CloudWatch, Dynatrace, and our custom Python monitors and pinpointed the root cause: a misconfigured AWS transit gateway update. No human could've connected those dots that fast. I watched in real time as it assigned tasks automatically (database rollback to Maria, DNS failover to Javier) while updating our status page. The precision felt almost surgical.

Here's where it got visceral: Zenduty didn't just notify, it escalated. When Javier missed the first Slack alert (his toddler was having a meltdown), the system immediately called his designated backup over WhatsApp while texting his secondary contact. All this unfolded as I stood frozen in my kitchen, apron still tied, staring at the glowing rectangle in my palm. The bitter taste of dread faded as green status lights flickered back across the service map. Customers never knew how close we came to catastrophe.

But let's talk about the ugly parts. Configuring those automated runbooks? Pure agony. I spent three Sundays debugging YAML files that made me question my career choices. The mobile interface's "incident timeline" view becomes a Picasso painting during complex outages – too many overlapping actions crammed into too little space. And don't get me started on their pricing tiers; scaling up feels like being shaken down by digital highwaymen. Yet when our Kafka clusters imploded last quarter, I'd have paid double just to feel that cool, algorithmic certainty guiding us through the wreckage.

What haunts me now isn't failure – it's the ghost of our pre-Zenduty existence. I still flinch when phones ring after midnight, muscle memory from nights spent manually bridging conference calls while reading error logs aloud like some tech exorcism ritual. Last Tuesday, when our API gateway choked during a funding announcement, I actually smiled. Zenduty had already spun up parallel environments before our CTO finished his first curse word. That's the real magic: it transforms panic into mere inconvenience. I still drink too much coffee, but now it's accompanied by something unfamiliar – quiet confidence.

Keywords: Zenduty, news, incident management, DevOps automation, alert fatigue