AlertOps: Tame Alert Storms & Slash MTTR with Intelligent Incident Routing

After three consecutive nights of being jarred awake by irrelevant system alerts while my critical database issue went unnoticed, I was ready to quit DevOps. That's when our CTO mandated AlertOps. The transformation wasn't gradual; the relief was instantaneous. This brilliant incident management platform doesn't just forward notifications; it surgically delivers them to the right engineer at the right time, finally letting us focus on solutions instead of alert triage.

The moment I configured smart routing rules, our team's collective shoulders dropped. Last Thursday at 3:17 AM, when our payment gateway faltered, I watched in awe as the alerts bypassed 15 engineers and reached Maria's phone. She was the only person both certified on that subsystem and actually on call. The precision felt like having an expert conductor orchestrating our response team, and it eliminated those chaotic group notifications where everyone assumes someone else will act.
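To make the routing idea concrete, here is a minimal sketch of the filtering such a rule implies, assuming each engineer is tagged with subsystem certifications and an on-call flag. The `Engineer` type, the `route_alert` function, and the subsystem names are hypothetical illustrations, not AlertOps's API or rule format.

```python
from dataclasses import dataclass, field


@dataclass
class Engineer:
    # Hypothetical model of a team member; not an AlertOps data type.
    name: str
    certifications: set[str] = field(default_factory=set)
    on_call: bool = False


def route_alert(subsystem: str, team: list[Engineer]) -> list[str]:
    """Return the names of engineers who are both certified on the
    alert's subsystem and currently on call; everyone else is skipped."""
    return [e.name for e in team
            if subsystem in e.certifications and e.on_call]


# Usage: 15 engineers who should be skipped for a payments alert, plus Maria.
team = [Engineer(f"eng-{i}", {"storage"}, on_call=(i % 2 == 0)) for i in range(15)]
team.append(Engineer("Maria", {"payments"}, on_call=True))
print(route_alert("payments", team))  # ['Maria']
```

Requiring both conditions is what keeps a certified engineer who is off shift, or an on-call engineer who doesn't know the subsystem, from being woken up.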

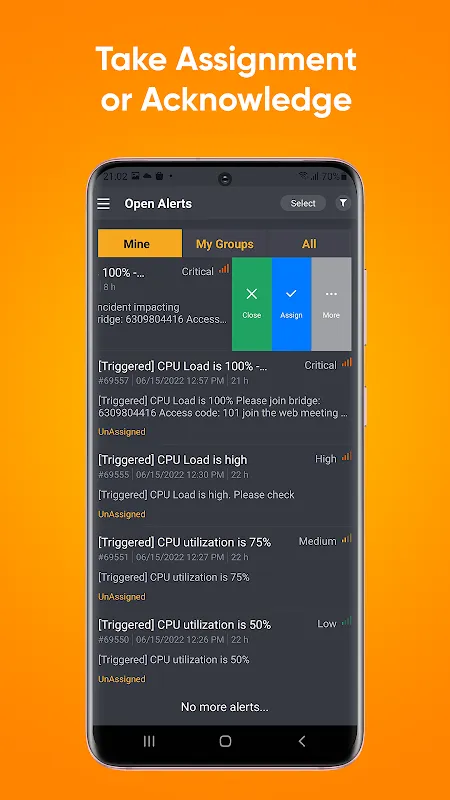

The push notification actions are what truly reshaped our workflow. During a hurricane-induced outage, I was coordinating from my car when the storage array alerts flooded in. Without even unlocking my phone, I reassigned three critical tickets directly from the notification shade while the windshield wipers battled the downpour. That tactile efficiency - swiping and tapping glowing icons in the dark - turned potential hours of downtime into minutes.

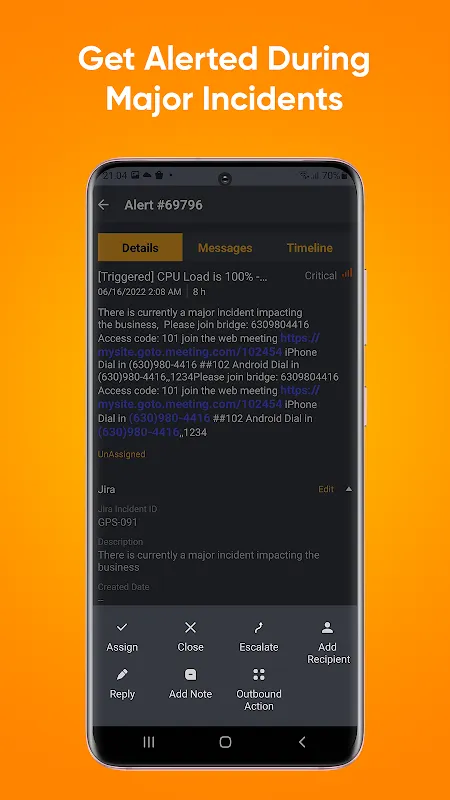

I've grown dependent on the resolution audit trail feature. When our European team accidentally triggered false alerts last quarter, I could trace exactly how each ticket moved through John's diagnosis, Sofia's escalation, and Amir's solution - all timestamped with technical notes. It transformed post-mortems from blame sessions into learning opportunities, with every decision visible like layers in an archaeological dig.

At 11:30 PM during peak tax-season load, red alerts started screaming across our monitoring wall. My fingers trembled slightly as I tapped the "escalate" button on a latency spike alert, instantly routing it to our network specialist even though he was off duty. Within 90 seconds, his response appeared in the unified message thread: "Router BGP flap - mitigated." That rapid-fire collaboration, with no app-switching, felt like passing the baton in a well-practiced relay team.

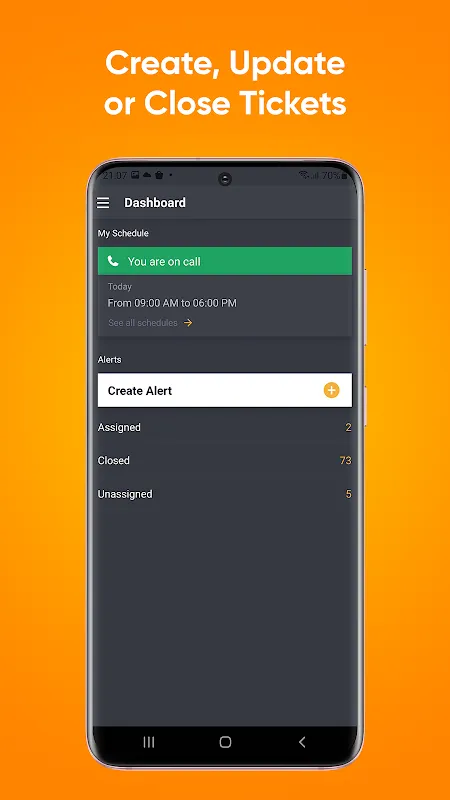

The multi-tiered escalation system saved our holiday launch. When our primary on-call engineer missed a Cassandra alert during his daughter's recital, the system automatically pinged three backups in sequence. By tier three, our lead architect had received the alert with diagnostic graphs attached. Watching the escalation path light up on my dashboard, from green to yellow to red, gave me visceral comfort that failures could no longer slip through the cracks.
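Under the hood, tiered escalation amounts to a simple loop. The sketch below assumes a hypothetical `notify_and_wait` hook that pages one responder and reports whether they acknowledged within that tier's window; the `escalate` function and tier names are illustrative only, not AlertOps's API or configuration.

```python
from typing import Callable, Optional


def escalate(
    alert: str,
    tiers: list[str],
    notify_and_wait: Callable[[str, str], bool],
) -> tuple[list[str], Optional[str]]:
    """Page each tier in order until someone acknowledges.

    notify_and_wait(responder, alert) is a hypothetical hook that pages the
    responder and returns True if they acknowledge within that tier's window.
    Returns the tiers that were paged and who acknowledged (None if nobody did).
    """
    paged: list[str] = []
    for responder in tiers:
        paged.append(responder)
        if notify_and_wait(responder, alert):
            return paged, responder  # stop at the first acknowledgement
    return paged, None  # every tier missed the page


# Usage: the primary misses the page, so escalation walks down the chain.
tiers = ["primary", "backup-1", "backup-2", "lead-architect"]
answering = {"lead-architect"}
paged, acked_by = escalate("cassandra-alert", tiers, lambda who, _alert: who in answering)
print(paged, acked_by)  # ['primary', 'backup-1', 'backup-2', 'lead-architect'] lead-architect
```

Keeping the per-tier timeout inside the hook leaves the loop trivial; the property that matters is that paging stops at the first acknowledgement, so later tiers only hear about an alert when everyone before them has missed it.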

My sole frustration emerges during complex cross-team incidents. While the Android app handles individual alerts beautifully, coordinating multi-service outages still requires desktop access for visual mapping. I'd sacrifice some notification prettiness for integrated dependency diagrams. Yet this feels minor when weighed against waking up to zero overnight alerts for six consecutive weeks - a previously unimaginable luxury.

For teams drowning in PagerDuty spam or struggling with war room chaos, AlertOps isn't just useful - it's career-saving infrastructure. The magic isn't in flashy dashboards but in its surgical precision in delivering the right alert to the right human. After 18 months of use, I still feel quiet gratitude each time my phone lights up with an alert that actually matters to me.

Keywords: incident management, alert routing, on-call scheduling, MTTR reduction, push notifications