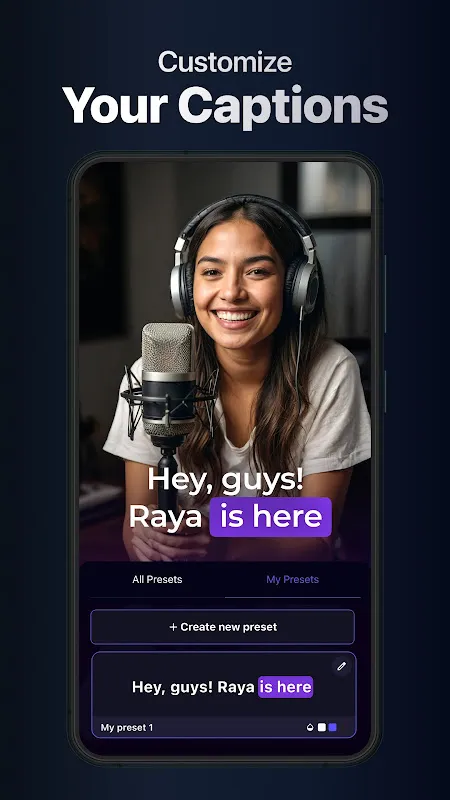

Dubs Freed My Captioned Soul


Rain lashed against my office window as I slumped over my laptop, fingers trembling over the keyboard. Another client deadline loomed in 90 minutes, and my latest explainer video, a 22-minute beast, sat silently on screen, its raw footage mocking me. I’d spent three days scripting, filming, and editing, only to realize I’d forgotten the captions. Again. My throat tightened; manual transcription meant typing through lunch, canceling my daughter’s school play, and sending another apology text to my wife. That’s when Mia, my eternally calm colleague, slid her phone across my desk. "Try this," she murmured. "It eats audio for breakfast." Skepticism warred with desperation as I downloaded the app: no tutorial, no fanfare. I uploaded the video file, pressed a single button, and watched in disbelief as words materialized on screen, perfectly synced to my own voice discussing market analytics. Ten minutes. Not hours. Not even thirty. Automated speech recognition wasn’t just a feature; it felt like digital telepathy.

The first time I used it properly, chaos reigned. My home office doubled as a playground: my toddler’s squeals blended with cartoons blaring from the living room. I’d recorded a crucial segment beside an open window during a thunderstorm, certain the audio was garbage. But Dubs didn’t flinch. It picked out my voice through the rain pelting the glass and my son’s off-key rendition of "Twinkle Twinkle," producing crisp text while flagging sections with background interference. That’s when I dug deeper. Unlike basic transcription tools, this thing used contextual noise isolation algorithms, layers of neural networks that distinguish primary speech from ambient chaos. It handled accents too; my Scottish client’s thick brogue, which once required three rewinds per sentence, now transcribed seamlessly. I’d whisper-test it sometimes: "Revenue projections are… biscuit?" Just to see. It always corrected me. Always.

But let’s not pretend it’s flawless. Last month, during a webinar recording, it turned "quantitative easing" into "quantum sneezing"—a glitch that nearly made me spit out my coffee. Editing those captions felt like defusing a bomb; one misplaced keystroke could ruin the flow. Yet even here, the app’s rhythm detection surprised me. It didn’t just transcribe—it emulated human cadence, inserting natural pauses where I took breaths, breaking sentences where inflection dipped. Fixing errors became less surgery and more tweaking, like adjusting punctuation in a lively email. And when my battery died mid-process during a train ride? Auto-sync resurrected everything without losing a syllable. That’s witchcraft disguised as cloud storage.

Freedom arrived subtly. No more midnight captioning marathons with red-rimmed eyes. Instead, I’d finish edits, hit export, and actually walk away. One Tuesday, I used the reclaimed hour to teach my kid chess. His triumphant "Checkmate!" echoed louder than any notification ping. The app didn’t just shave time; it carved out space for living. Still, I rage-quit once when it ignored the hand gestures that carried half the meaning of a tutorial. Captions can’t read body language… yet. But when it flawlessly captured my whispered pep talk before a big pitch? "You’ve got this, you magnificent disaster." I kept that typo-free gem as a screensaver. Raw humanity, perfectly preserved.

Keywords: Dubs, news, AI transcription, content creation, time saving