Editing Hope: An AI Photo Tale

Rain lashed against the shelter's window as I crouched on the concrete floor, camera trembling in my hands. Midnight, a pitch-black stray with eyes like liquid gold, kept darting behind donation boxes. Every shot showed peeling walls and stacked crates, and adopters browsing online scrolled right past her photos. My chest tightened; this was her third week here. That's when Sarah from the volunteer group texted: "Try that new AI thing – slices backgrounds like butter."

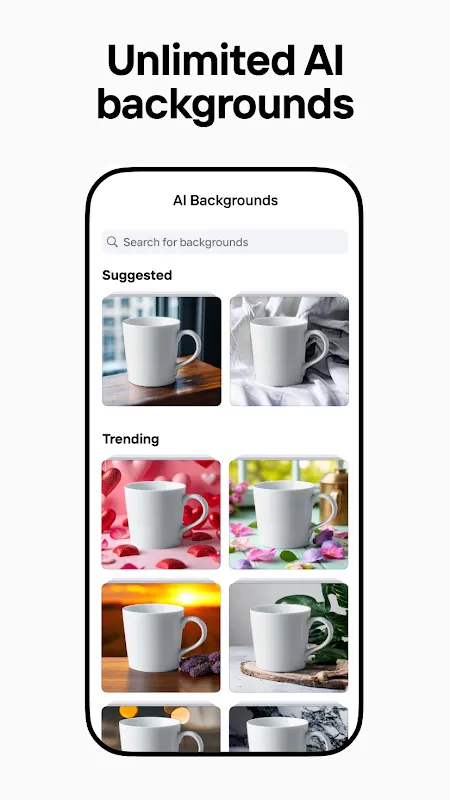

Downloading Photoroom felt like gambling my last dollar. The tutorial popped up, but I swiped it away – desperation doesn’t read manuals. I stabbed at Midnight’s least-blurry photo. One tap. Suddenly, the visual chaos evaporated like steam from a manhole. Just her, suspended in pearlescent white. My breath hitched. That wasn’t editing; it was digital alchemy. The app didn’t just remove backgrounds – it erased despair.

But magic has seams. When I fed it a shot of Midnight curled on a navy blanket, the AI got confused. Her inky fur bled into the fabric, leaving jagged halos. "What a piece of junk!" I hissed, slamming my phone onto the table. The shelter’s ancient terrier, Buddy, startled awake. Turns out segmentation models struggle when subject and background share nearly the same dark tones: with so little contrast at the edges, the network has almost nothing to separate fur from fabric. I cranked the exposure slider up until her silhouette emerged crisp against a lemon-yellow backdrop. The app’s genius wasn’t perfection; it was salvage speed. Five minutes instead of five hours in Photoshop.

Next morning, I uploaded the polished gallery: Midnight gazing out from sun-dappled grass, Midnight perched on faux marble. Within hours, notifications exploded. A family wanted her. Watching them cradle her in the adoption room, I finally exhaled. Photoroom’s semantic segmentation, that invisible pixel-by-pixel sorting of "cat" from "not cat," did more than clean up images. It rewrote futures. Still, I cursed its subscription model later: $10 a month stings when you’re volunteering.

Now I stalk shelter corners like a tech-enabled paparazzo. When Whisper, a scarred greyhound, shied from the lens, I snapped him mid-shiver against chain-link fencing. Photoroom’s batch processing vaporized the metal grid, dropping him onto autumn leaves. That generative fill witchcraft? It isn’t copying pixels out of some database; it’s a model trained on vast image collections, hallucinating plausible foliage from the patterns it absorbed. Ethical quicksand, maybe, given whose photos fed that training. But when his adoption post trended? Zero regrets.

Yesterday broke me, though. Luna, a three-legged kitten, was playing in donated Christmas tinsel. The AI mistook her prosthetic for debris, leaving jagged holes in her fur. "You blind algorithm!" I screamed at the screen. After two hours with the manual brush tools, she sat whole in a snowy digital wonderland. Photoroom giveth and taketh away; its machine-learning gods demand sacrifice. Still, seeing Luna’s "adopted" tag this morning? Worth every glitch.

This app didn’t just change my editing workflow; it rewired my nervous system. I catch myself squinting at café menus, mentally erasing text with an imaginary "clean" button. Real magic isn’t flawless code – it’s the milliseconds between tapping "process" and witnessing impossible transformations. Even when it fails, the sheer audacity of attempting real-time background annihilation leaves me vibrating. Now if they’d just fix those shadow artifacts…

Keywords:Photoroom AI Photo Editor,news,animal rescue photography,AI editing flaws,semantic segmentation,background removal