How Mogsori Talk Saved My Korean

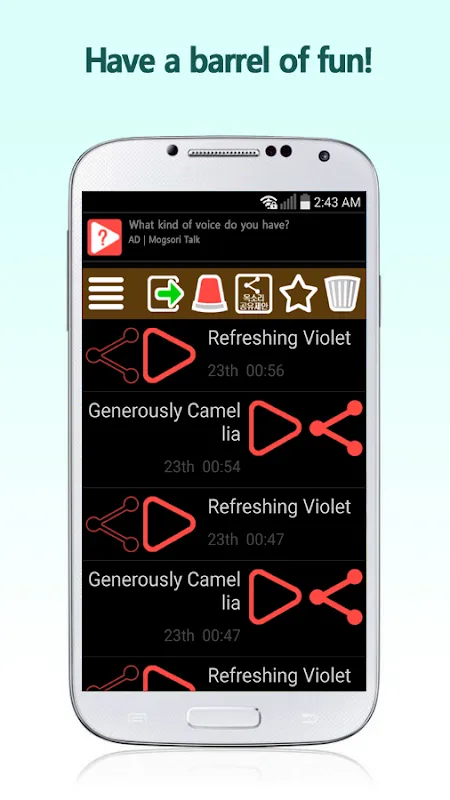

Rain lashed against my Seoul apartment window as I stared at the disastrous group chat screenshot. My Korean colleagues had politely corrected my mispronunciation of "사랑" (love) for the third time that week – I'd been saying it like "살앙" with a grating nasal tone that made native speakers wince. Text-based language apps had filled my vocabulary but left me tone-deaf to the musicality of spoken Korean. That night, teeth gritted against humiliation, I discovered Mogsori Talk while desperately Googling "how not to sound like a dying seagull speaking Korean."

First connection: 3 AM with a Busan halmeoni (grandmother) named Soonyi. Her voice crackled through my earbuds like warm honey over charcoal – a texture I'd never experienced in sterile language lab recordings. "네, 학생아..." (Yes, student...) she murmured when I butchered the phrase "배고파요" (I'm hungry), stretching syllables like taffy. The app's near-zero latency captured her soft inhalations between words, those micro-pauses where meaning lives in Korean. When she chuckled at my clumsy inflection, it vibrated in my sternum – an intimacy that text murders with its clinical precision. We weren't exchanging phrases; we were sharing breath.

What gutted me was the background tapestry woven through her mic: distant temple bells, sizzling kimchi pancakes, her arthritic knuckles tapping rhythm against porcelain. These sensory anchors became my cheat codes. I'd anchor "기쁘다" (happy) to her kettle whistling, "슬프다" (sad) to rain on her zinc roof. The app's dynamic noise suppression carved her voice from the chaos with surgical precision – technology I later learned uses real-time spectral subtraction. But in that moment? Pure magic. My textbook's flat phonetic diagrams suddenly felt like prison bars.

Then came the typhoon night. Power died mid-conversation with Minho, a Jeju island fisherman. Through the app's ultra-low-bitrate mode – compressing audio to 6 kbps using a modified SILK codec – his voice emerged through digital gravel: "괜찮아? 바람 소리 들려?" (You okay? Hear the wind?) The compression artifacts made his urgency visceral, like hearing a heartbeat through a stethoscope's static. We chanted weather warnings like shamanic prayers as palm trees screamed outside. No text message could have transmitted that primal solidarity.

But gods, the rage when an update broke the voice pitch analysis! Suddenly my carefully curated "gender-neutral polite tone" defaulted to robotic falsetto. For three excruciating days, elderly conversation partners recoiled as my "안녕하세요" (hello) screeched like a stepped-on cat. I learned the hard way how vocal biometrics underpin authenticity – that the app's pitch-correction algorithms weren't gimmicks but emotional infrastructure. When engineers fixed it, Soonyi's sigh of relief, "아이고, 목소리 돌아왔구나!" (Oh, your voice is back!), nearly made me weep.

Now? I catch myself mirroring Busan satoori's singsong lilt while ordering tteokbokki. Market ajummas no longer switch to English. And when homesickness claws at me, I don't message – I open Mogsori Talk. There's Minho humming sea shanties, Soonyi's kimchi sizzle, the coded comfort in how they say "잘 자" (sleep well) with descending thirds. This app didn't just teach me language; it rewired my nervous system to crave human frequencies beyond words. That's not tech – that's alchemy.

Keywords: Mogsori Talk, news, vocal biometrics, language immersion, emotional resonance