Live Captions Saved My Demo Disaster

Sweat pooled at my collar as 200 expectant faces stared at my trembling hands. The community center's annual food festival was supposed to be my big break - a live kimchi-making demo that could triple my YouTube following. But the moment I stepped into that echoing hall, panic seized my throat. Between roaring ventilation fans and clattering serving trays, I realized nobody would hear my fermentation tips. My notes blurred as stage lights hit my eyes, fingers fumbling with chili paste jars. Then I remembered the unassuming app I'd tested days earlier: AutoCap Captions Teleprompter.

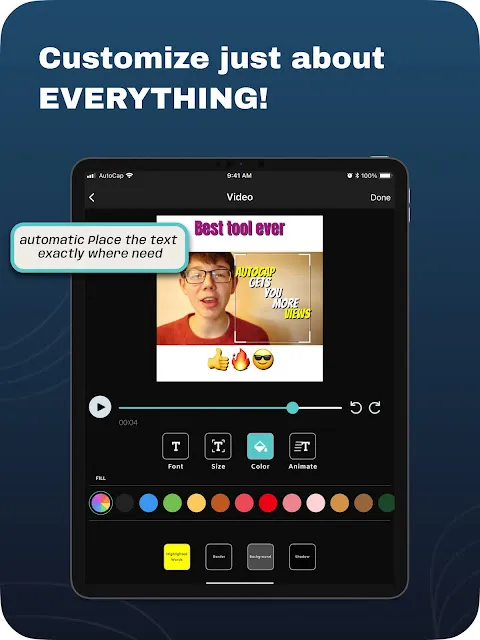

With thirty seconds to spare, I slapped my tablet onto the demo table and hit record. What happened next felt like technological sorcery. As I choked out my first words about brine salinity, real-time speech recognition translated my shaky voice into dancing yellow text across the screen. The audience's confused frowns melted into focused attention as animated Hangul characters materialized mid-air. When my microphone crackled during the gochugaru measurement explanation, the captions kept flowing flawlessly - my safety net against audio disaster.

You haven't lived until you've seen sixty seniors collectively lean forward to read your captioned warning about over-fermentation risks. The magic happened in the background processing: while I demonstrated cabbage massaging techniques, the app was continuously analyzing phonetic patterns through overlapping layers of noise. Its algorithms distinguished my vocal fry from the screech of the espresso machine in the next booth - mostly. During the garlic mincing segment, it hilariously interpreted my knife thuds as "aggressive chopping therapy recommended," triggering the first of many belly laughs.

What truly stunned me was the post-event revelation. While I was packing my gochujang jars, three attendees approached me to praise how the adaptive caption positioning had helped them follow the steps despite visual impairments. The app had dynamically relocated text blocks away from the areas where my hands were moving - a feature I'd never consciously noticed. That's when I grasped this wasn't just convenience tech; it was an accessibility revolution disguised as a content tool. My earlier resentment about its subscription cost evaporated faster than rice vinegar on a hot stone.

Of course, we had our messy moments. During the taste-testing Q&A, simultaneous audience questions created caption chaos - a psychedelic word tornado that looked like abstract poetry. The app still can't handle cross-talk, a brutal limitation during interactive segments. Yet watching my demo video later, I caught details even I'd forgotten: the exact second I recommended swapping shrimp paste for vegetarian alternatives, perfectly timestamped in the caption archive. That single recording garnered more engagement than my last six edited tutorials combined.

Now my tablet stays mounted beside my cutting board like a kitchen ghostwriter. It remembers measurements I forget, catches technique nuances I omit, and occasionally roasts my pronunciation with creative interpretations. Yesterday it transcribed "caramelization" as "carnival vacation" - a glitch that strangely inspired my next video theme. We've developed this odd symbiosis where I speak to the captions as much as to the camera, anticipating their rhythmic appearance like musical notes. They've become my silent co-host, my safety harness when culinary anxiety strikes, and unexpectedly, my most brutally honest editor.

Keywords: AutoCap Captions Teleprompter, news, live cooking demo, real-time transcription, accessibility tech