Midnight Meltdown to Morning Masterpiece

Deadline dread tasted like stale coffee and panic sweat as I glared at my monitor. The client wanted a complete restaurant rebrand by sunrise – logo, menu, interior concepts – and my brain had flatlined. My usual workflow felt like trying to sculpt fog: Pinterest tabs multiplied like gremlins, color palettes clashed violently, and every font looked like it was mocking me. That's when my trembling fingers typed "design rescue" into the App Store, desperate for anything resembling creative CPR.

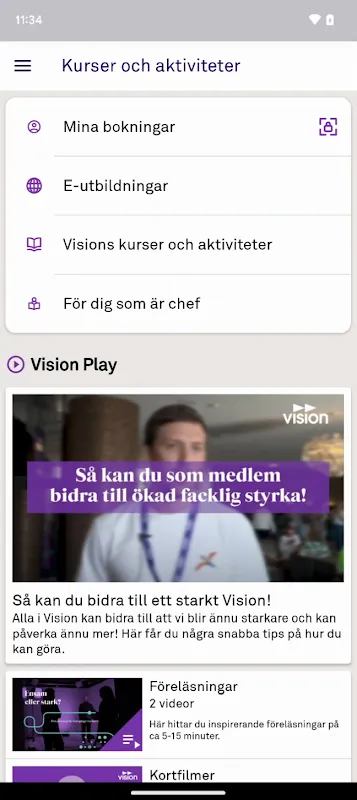

Within minutes, Vision reshaped my chaos. Not with overwhelming options, but with intelligent curation. Instead of dumping 10,000 serif fonts on me, it asked three questions about the restaurant's personality ("rustic? playful? upscale?") and served five perfect typography pairings with scientific explanations about x-height ratios and optical spacing. That technical transparency shocked me – normally design apps treat their algorithms like nuclear codes. Here was a tool explaining why certain curves evoked warmth while sharp angles suggested precision, making me feel less like an imposter clicking randomly.

Around 2 AM, magic happened. I snapped a photo of my cat's paw print in spilled coffee (don't ask). Vision didn't just crop it. Its pattern engine analyzed the organic shapes, then generated custom repeat motifs while preserving the stain's watercolor texture. Under the hood, it seemed to be using some kind of neural style transfer witchcraft, but the UX felt like collaborating with an intuitive partner. When I tweaked the symmetry slider, I could practically hear the algorithm recalculating fractal patterns in real time. Most "smart" tools feel like stubborn interns; this one responded like a jazz musician riffing on my half-baked ideas.

The real gut-punch came with mood boards. Instead of static images, Vision created interactive collages where tapping a ceramic plate pulled up matching local suppliers, or hovering over a color swatch revealed its Pantone code and complementary shades. Suddenly I wasn't just making pretty pictures – I was building a functional ecosystem where every visual decision linked to real-world execution. When I hesitantly hit "present," the app didn't just export PDFs. It generated a mini-website with animated transitions between concepts, explaining the design narrative in client-friendly language. Watching their eyes widen on Zoom was better than three espresso shots.

Of course, it wasn't all rainbows. The subscription cost made me choke, and the auto-save feature once failed during a lightning storm, vaporizing 47 minutes of painstaking layer adjustments. Vision's insistence on "harmonious minimalism" also occasionally felt restrictive – trying to design a psychedelic taco truck theme triggered so many "aesthetic conflict" warnings you'd think I'd insulted its grandmother. And don't get me started on the iPad version's janky palm rejection, which turned elegant strokes into toddler scribbles.

But here's the raw truth: at 5:47 AM, when I attached files to an email labeled "FINAL_FINAL_REALLYFINAL," I didn't feel drained. I felt like a sculptor who'd discovered power tools. Vision didn't just rescue my deadline – it rewired my creative process. Now when inspiration stalls, I don't panic. I grab messy snapshots, dump half-formed thoughts into its canvas, and trust that structured serendipity will bridge the gap between my scrambled neurons and polished execution. The coffee still tastes terrible though.
