Midnight Pointer Epiphany

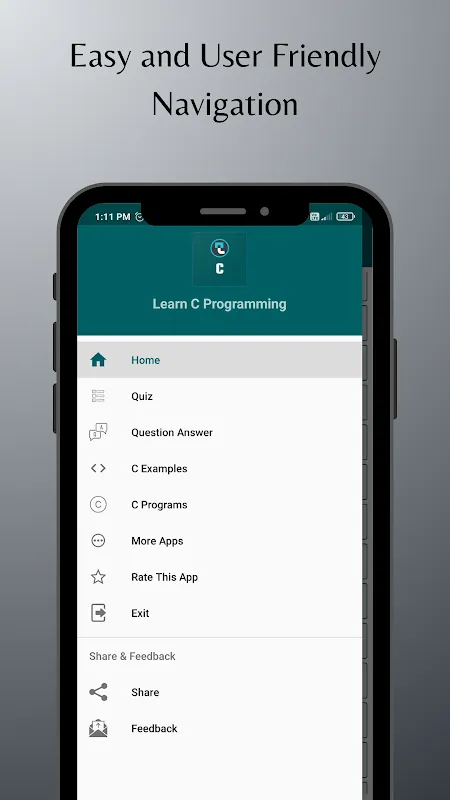

Rain lashed against my apartment windows as I hunched over the laptop, debugging logs blurring before sleep-deprived eyes. That damned segmentation fault haunted my project for three straight nights - some ghost in the machine corrupting sensor data from our agricultural drones. Each core dump pointed toward pointer arithmetic gone wrong, but tracing the memory addresses felt like chasing shadows. My coffee had gone cold when I remembered the Learn C Programming app buried in my phone's "Productivity" folder (a tragically optimistic categorization).

What happened next wasn't magic but meticulous engineering. The app's memory visualizer transformed abstract addresses into color-coded blocks that physically pulsed when dereferenced. I drew pointer paths with my finger like connecting stars in a constellation - watching how *(sensor_array + i) actually crawled through adjacent memory slots. When I modified the offset calculation, the visualization immediately showed how the pointer now landed precisely on the temperature field instead of overflowing into calibration data. This tactile feedback loop finally made decades of textbook diagrams click - pointers weren't theoretical monsters but spatial relationships I could manipulate.
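For readers who want to see the same idea outside the visualizer, here is a minimal sketch of typed versus byte-level pointer arithmetic. The `SensorReading` layout and both function names are hypothetical; the real drone struct isn't reproduced in this post, so treat the field order as an assumption.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical record layout, assumed for illustration only. */
typedef struct {
    float temperature;  /* degrees Celsius */
    float humidity;     /* percent */
    float calibration;  /* per-sensor correction factor */
} SensorReading;

/* Typed arithmetic: sensor_array + i advances by i * sizeof(SensorReading)
 * bytes, so *(sensor_array + i) is exactly sensor_array[i]. */
float temperature_at(const SensorReading *sensor_array, size_t count, size_t i)
{
    assert(i < count);  /* stay inside the array */
    return (*(sensor_array + i)).temperature;
}

/* Byte-level arithmetic: the same address computed by hand. Using sizeof
 * and offsetof instead of hard-coded numbers keeps the pointer on the
 * temperature field rather than drifting into a neighbouring one. */
float temperature_at_bytes(const SensorReading *sensor_array, size_t count, size_t i)
{
    assert(i < count);
    const unsigned char *base = (const unsigned char *)sensor_array;
    const unsigned char *field = base
        + i * sizeof(SensorReading)
        + offsetof(SensorReading, temperature);
    float value;
    memcpy(&value, field, sizeof value);  /* copy the bytes out safely */
    return value;
}
```

Both functions return the same value for any valid index. The second one simply spells out the arithmetic the compiler performs for the first, which is roughly what the colored blocks were showing me.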

At 3:17 AM, I actually laughed aloud when the drone simulator processed a full dataset without crashing. The app's offline compiler had caught my attempted shortcut of casting void pointers without proper alignment - an error GCC only revealed through cryptic warnings. Its error explanations dissected the hardware reality: how many ARM cores fault on, or pay a penalty for, word accesses that are not word-aligned, and why my lazy cast violated that. This wasn't just syntax correction but computer architecture education condensed into three bullet points with stack diagrams. My university professors never explained the silicon consequences of taking shortcuts.
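The shape of that mistake, and one common way around it, can be sketched as follows. This is not the app's suggested fix verbatim; `load_u32` and `is_aligned_for_u32` are hypothetical helper names, and the sketch assumes a C11 compiler for `_Alignof`.

```c
#include <stdint.h>
#include <string.h>

/* The risky shortcut looks like this:
 *
 *     uint32_t value = *(const uint32_t *)raw;
 *
 * If raw is not suitably aligned for uint32_t, the behavior is undefined,
 * and on some cores the load traps. */

/* Safer: copy the bytes into a properly aligned local. Compilers usually
 * turn this into a single load where the target allows it. */
uint32_t load_u32(const void *raw)
{
    uint32_t value;
    memcpy(&value, raw, sizeof value);
    return value;
}

/* Returns nonzero when p meets the alignment requirement of uint32_t.
 * Handy as a debug check before handing a pointer to code that casts. */
int is_aligned_for_u32(const void *p)
{
    return ((uintptr_t)p % _Alignof(uint32_t)) == 0;
}
```

The `memcpy` version reads the value in the host's byte order, the same as the cast would have, so it changes nothing about endianness. It only removes the alignment assumption.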

Yet for all its brilliance, the app's file handling module nearly made me throw my phone. Working with SD card logs required battling its stubborn insistence on POSIX paths while my embedded system used FAT32 shortnames. The tutorial demanded absolute paths like "/mnt/sdcard/logs.csv" when my device only recognized "SD:/LOGS.CSV". After fifteen minutes of failed fopen() attempts, I discovered the workaround buried in a forum post - prepending "file://" before paths. That triumph tasted bitter when the app later crashed upon closing large CSV files, wiping unsaved code snippets. Such platform-specific oversights felt jarring for a tool otherwise meticulous about portability.

During my morning commute, I used the app's code playground to stress-test buffer handling - watching real-time memory allocation graphs while flood-filling a 10,000-element array. Seeing the heap consumption spike then plateau when I implemented ring buffers gave visceral satisfaction. That tactile understanding stuck better than any lecture on malloc() fragmentation ever could. By the time the train reached downtown, I'd redesigned our sensor parser using mmap() - a technique I'd avoided for years but now grasped through the app's filesystem animation showing memory-mapped I/O skipping the extra copy from the kernel's page cache into a user-space buffer.
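The plateau comes from allocating once and then overwriting the oldest slot instead of growing. A minimal sketch of such a ring buffer, assuming float samples and with all names (`RingBuffer`, `ring_push`, and so on) invented for illustration:

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

typedef struct {
    float *slots;     /* storage, allocated once in ring_init */
    size_t capacity;  /* total number of slots */
    size_t head;      /* index where the next sample will be written */
    size_t count;     /* number of valid samples, never above capacity */
} RingBuffer;

/* Allocates the storage; returns 0 on success, -1 if malloc fails. */
int ring_init(RingBuffer *rb, size_t capacity)
{
    assert(capacity > 0);
    rb->slots = malloc(capacity * sizeof *rb->slots);
    if (rb->slots == NULL) {
        return -1;
    }
    rb->capacity = capacity;
    rb->head = 0;
    rb->count = 0;
    return 0;
}

/* Stores a sample, overwriting the oldest one once the buffer is full.
 * No allocation happens here, so heap usage stays flat. */
void ring_push(RingBuffer *rb, float sample)
{
    rb->slots[rb->head] = sample;
    rb->head = (rb->head + 1) % rb->capacity;
    if (rb->count < rb->capacity) {
        rb->count++;
    }
}

/* Returns the sample written `age` pushes ago (0 is the newest). */
float ring_recent(const RingBuffer *rb, size_t age)
{
    assert(age < rb->count);
    size_t index = (rb->head + rb->capacity - 1 - age) % rb->capacity;
    return rb->slots[index];
}

/* Releases the storage and resets the bookkeeping fields. */
void ring_free(RingBuffer *rb)
{
    free(rb->slots);
    rb->slots = NULL;
    rb->capacity = rb->head = rb->count = 0;
}
```

Pushing 10,000 samples into a four-slot buffer leaves exactly four samples resident, which is the flat line the allocation graph drew once the initial spike was over.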

What astonishes me isn't that I solved the bug, but how this unassuming tool reshaped my neural pathways. When I now encounter complex pointer chains, my mind instinctively renders them as those pulsating blue blocks from the app. Its genius lies in weaponizing mobile limitations - the touch interface forces direct manipulation of concepts I'd previously abstracted away. My only regret? That this didn't exist during my university days when pointer anxiety kept me from pursuing embedded systems sooner. Some lessons arrive precisely when desperation makes the mind receptive.

Keywords: Learn C Programming, news, pointer arithmetic, offline compiler, embedded development