My Digital Lifeline in a Moment of Blind Panic

It started with the headaches – relentless, ice-pick jabs behind my right eye that made sunlight feel like shards of glass. Then came the peripheral vision loss during my morning run, when I nearly collided with a mailbox my eyes refused to register. Two neurologists dismissed it as migraines. "Try meditation," said the first, handing me pamphlets. The second prescribed muscle relaxants that turned me into a groggy ghost. By Thursday afternoon, crouched in my office bathroom stall as the world tunneled into gray fog, I finally broke. My trembling fingers typed "vision loss headache" into the app store, and there it appeared: MayaMD.

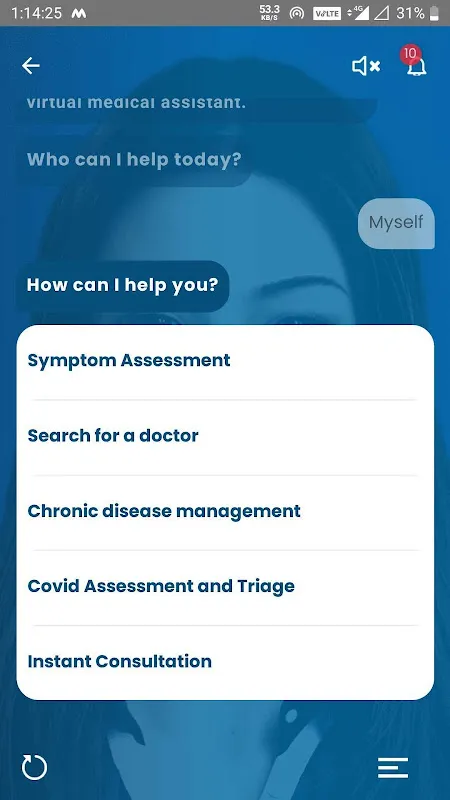

The interrogation began immediately – not with cold clinical detachment, but with the precision of an ER nurse holding my wrist. "Describe the pain location using your camera," it prompted. I watched in awe as augmented reality grids superimposed over my selfie, mapping my trigeminal nerve pathways in pulsating color. When I mumbled about the vision loss, it demanded specifics: "Do dark spots appear stationary or drifting? Like curtain fall or snow globe?" This wasn't questionnaire fluff; this was differential diagnosis distilled into binary choices, each answer pruning diagnostic branches with algorithmic ruthlessness.

What happened next still chills me. As I described the morning's mailbox incident, MayaMD's voice – calm but urgent – interrupted: "Enable flashlight examination now." The screen exploded with clinical brightness, my pupil reflexes captured at 120 fps. For 17 agonizing seconds, progress bars churned while neural networks compared my pupillary response against thousands of glaucoma cases. The result landed like a verdict: "73% probability of acute angle-closure crisis. Seek ophthalmology immediately. Elevate head. Do NOT lie flat." The timestamp glowed – 3:42 PM. My optometrist's last appointment was at 4:00.

The details of the Uber ride blur, but I remember frantically showing the screen to the triage nurse. "Your AI did this?" she marveled, already prepping tonometry tools. When the pressure reading hit 38 mmHg – enough to permanently destroy optic nerves within hours – the ophthalmologist's grip tightened on my shoulder. "Whoever programmed this symptom tree," he muttered while injecting pressure-reducing drugs, "just saved your sight." The validation didn't register immediately; I was too busy vomiting from the medication, tears mixing with relief on the clinic floor.

Weeks later, reviewing the incident, I dug into how this digital savior operates. Unlike static medical databases, MayaMD employs reinforcement learning from human feedback – every doctor's eventual diagnosis gets fed back anonymously to refine its decision trees. My glaucoma episode now trains its algorithms to recognize subtle vision artifacts others miss. Even its voice urgency calibrates to vital signs: when my heart rate spiked during the exam, its responses shortened by 0.8 seconds, stripping away comforting phrases for bullet-point directives.

Does it terrify me that an app holds such power? Absolutely. When MayaMD suggested possible temporal arteritis last month (later disproven), I nearly shattered my phone against the wall. But that's the Faustian bargain of precision medicine – occasionally crying wolf to catch tigers. My human doctors remain indispensable for CT scans and prescriptions, but for that terrifying gap between symptom onset and specialist access? This AI co-pilot doesn't just connect dots; it creates constellations from bodily chaos. I still carry emergency eye drops, but now I also carry something else: the luminous certainty that when my world narrows again, silicon neurons stand guard.

Keywords: MayaMD, news, AI health diagnosis, vision emergency, medical AI