My Messy Moments Turned Magical with Vidma

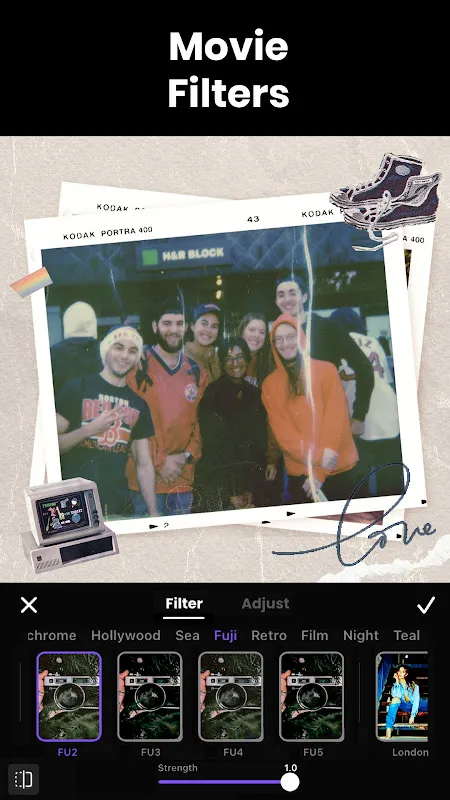

Rain lashed against my apartment window as I scrolled through my phone gallery, a graveyard of forgotten moments. That Bali waterfall clip? Half my thumb blocking the lens. My niece's birthday? A shaky mess where the cake toppled mid-shot. Each video felt like a crumpled postcard—vibrant but ruined. Then I remembered that blue icon tucked in my productivity folder. What the hell, I thought, dragging a chaotic 47-second clip of my dog chasing seagulls into Vidma Cut AI. Three taps later, magic happened.

The interface greeted me like a calm friend after a storm. No intimidating timelines, just a soothing gradient background and a "Create Story" button pulsing gently. I selected the seagull fiasco: raw, vertical, and punctuated by my frantic "Bella, no!" shrieks. When I hit "Auto Edit," the screen dimmed. Tiny progress bars danced like fireflies as invisible algorithms dissected the frames. Under the hood, it was presumably analyzing motion and audio waveforms, because it singled out Bella's wagging tail as the hero moment and trashed the blurry sand shots. Thirty seconds later, a polished 15-second sequence emerged: slow-mo paws skidding on wet sand, seagulls lifting off in perfect sync with the music's crescendo, even my panicked shrieks softened into something like a warm chuckle. Tears pricked my eyes. That chaotic memory now felt like a Pixar short.

Next, I got reckless. I dumped 12 GB of disconnected travel clips (Tokyo neon, Icelandic glaciers, a disastrous pasta-making attempt) into Vidma. The "AI Montage" feature promised cohesion. What it delivered felt like digital witchcraft. Apparently using some form of semantic analysis, it grouped scenes by color palette and mood: Iceland's icy blues merged into Tokyo's electric purples via a dissolve that mimicked melting ice. My flour-covered face became a comic transition between the bustling Shibuya Crossing and the silent northern lights. The algorithm even picked up ambient sounds, sizzling ramen broth and cracking glaciers, and wove them into the soundtrack's rhythm. But here’s where rage flared: exporting this masterpiece demanded Wi-Fi stronger than a nuclear reactor. My rural connection choked, and the export crashed twice mid-render. I nearly spiked my phone into the couch cushions.

Undeterred, I tackled my biggest shame: vertical videos. Vidma’s "AI Reframe" claimed to fix portrait-mode sins. I fed it a cringey clip of me attempting flamenco, shot vertically with my feet cropped mid-calf. The app seemed to track my limb positions, presumably with some kind of pose estimation, then dynamically reframed the shot horizontally by following my arm swirls. Suddenly, my awkward stomping filled the widescreen frame gracefully. Yet the AI couldn’t salvage my dignity. My flailing elbows triggered accidental zooms, making me look like a derailed windmill. I laughed until my ribs ached, then angrily deleted the evidence before my dance teacher could see.

Now, Vidma lives on my home screen. It resurrects buried memories, yes, but it also exposes tech's brutal limits. When algorithms misinterpret joy as chaos or Wi-Fi betrays ambition, frustration bites hard. Still, watching Bella’s seagull chase play smoothly on my TV last week? Pure dopamine. This isn’t just an editor; it’s a tiny robot poet translating my messy humanity into visual sonnets. Even when it trips.

Keywords: Vidma Cut AI, news, AI video editing, mobile storytelling, creative tools