My Phone Finally Reads My Mind

Rain lashed against the bus window as I frantically stabbed at my phone's screen, my thumb slipping on the condensation. The map app, buried beneath three layers of menus, had frozen mid-navigation just as my stop approached. Panic tightened my throat: another missed appointment, another awkward email apology. That's when I discovered the customization beast lurking in developer forums. Installing it felt like performing open-heart surgery on my device, granting permissions that made Android purists gasp.

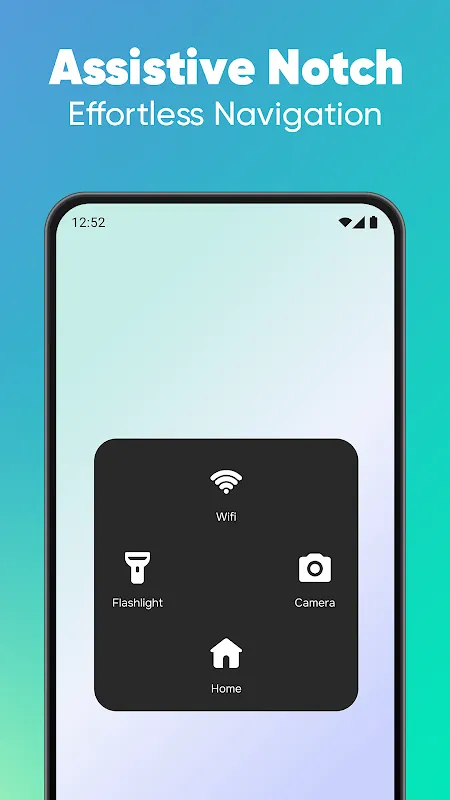

Waking from my ritual fifth snooze, I finally launched the control panel in a caffeine-deprived haze. The interface greeted me with intimidating blankness: no friendly tutorials, just a stark grid awaiting commands. My first attempt created chaos. A swipe gesture accidentally switched on the flashlight during a client video call, illuminating my unshaven chin like a horror-movie jump scare. The app didn't apologize; it demanded precision.

The Breakthrough Moment

Thursday's commute became revelation hour. With my thumb's muscle memory now trained, I flicked left from the screen edge to summon my transit dashboard: real-time bus location hovering above my ebook reader, Spotify controls glowing beneath the map. The magic happened when construction detours appeared. Before the automated announcement, my customized alert panel pulsed amber. I executed the emergency protocol: a single tap on the ride-share shortcut, a two-finger swipe to text my boss, all while keeping navigation visible. The woman beside me stared as I orchestrated this symphony without ever leaving my reading app.

What makes this sorcery possible? The app exploits Android's accessibility API in ways that would make Google's engineers sweat. Unlike clunky launchers, it draws a persistent overlay, essentially a parallel OS layer that intercepts touch events. During setup, I discovered its secret weapon: conditional triggers. My "commute mode" activates automatically when my headphones connect over Bluetooth AND my location-reported speed exceeds 15 mph. The technical elegance hit me when I analyzed its battery impact: the drain is negligible because the app suspends itself whenever my phone lies face-down.
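Stripped of the sorcery, a conditional trigger like "commute mode" is a boolean AND plus a suspend check. Here is a minimal Python sketch of that logic, assuming the app can read a Bluetooth headset state, a speed from location updates, and an accelerometer z value. The `DeviceState` fields, the `-8.0` face-down cutoff, and all function names are hypothetical illustrations, not the app's real API; on an actual phone these values would come from Android system services.

```python
from dataclasses import dataclass

# Threshold from the article's "commute mode" rule (15 mph).
COMMUTE_SPEED_MPH = 15.0
MPH_PER_MPS = 2.23694  # 1 m/s is about 2.237 mph

# Hypothetical cutoff: Android's accelerometer reads roughly +9.81 m/s^2 on
# the z axis when a phone lies screen-up and roughly -9.81 when screen-down.
FACE_DOWN_Z = -8.0


@dataclass
class DeviceState:
    headphones_connected: bool  # hypothetical: Bluetooth headset link is up
    speed_mps: float            # hypothetical: speed from the location fix
    accel_z: float              # hypothetical: accelerometer z reading, m/s^2


def is_face_down(state: DeviceState) -> bool:
    return state.accel_z < FACE_DOWN_Z


def commute_trigger(state: DeviceState) -> bool:
    # Conditional trigger: BOTH conditions must hold (logical AND).
    speed_mph = state.speed_mps * MPH_PER_MPS
    return state.headphones_connected and speed_mph > COMMUTE_SPEED_MPH


def evaluate(state: DeviceState) -> str:
    # The suspend check runs first, so a face-down phone skips everything else.
    if is_face_down(state):
        return "suspended"
    return "commute" if commute_trigger(state) else "idle"


if __name__ == "__main__":
    print(evaluate(DeviceState(True, 10.0, 9.81)))   # commute (about 22 mph)
    print(evaluate(DeviceState(True, 5.0, 9.81)))    # idle (about 11 mph)
    print(evaluate(DeviceState(True, 10.0, -9.81)))  # suspended (face-down)
```

Checking the cheap face-down condition before anything else mirrors the battery behavior described above: when the phone is flat on its screen, no trigger logic runs at all.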

Last Tuesday exposed its brutal honesty. Attempting to create gesture-controlled smart home commands, I triggered a cascade failure that rebooted my router. The app offered zero sympathy - just an error log colder than Arctic ice. This thing doesn't coddle; it expects mastery. Yet when I finally nailed the sequence - knuckle tap for thermostat, clockwise circle for lights - the triumph felt like cracking a cryptographic puzzle. My living space bent to my will through glass and code.
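Telling a clockwise circle from a counterclockwise one is a classic geometry trick: run the shoelace formula over the touch points and check the sign of the enclosed area. The sketch below is a minimal illustration, not the app's recognizer. It assumes the stroke arrives as screen coordinates (y grows downward, so a positive area means visually clockwise) and that knuckle taps are classified upstream. The gesture names, action names, and `min_area` threshold are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]

# Hypothetical mapping from recognized gestures to smart home actions.
ACTIONS = {
    "knuckle_tap": "toggle_thermostat",
    "clockwise_circle": "toggle_lights",
}


def signed_area(points: List[Point]) -> float:
    """Shoelace formula over a closed stroke (last point joins the first)."""
    total = 0.0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]
        total += x1 * y2 - x2 * y1
    return total / 2.0


def classify_stroke(points: List[Point], min_area: float = 100.0) -> str:
    # Screen y grows downward, so a positive area means visually clockwise.
    area = signed_area(points)
    if area > min_area:
        return "clockwise_circle"
    if area < -min_area:
        return "counterclockwise_circle"
    return "unknown"  # too flat to count as a circle


def dispatch(gesture: str) -> Optional[str]:
    return ACTIONS.get(gesture)  # None for gestures with no mapping


if __name__ == "__main__":
    # 32 touch samples on a circle of radius 100 px centered at (200, 300).
    # Increasing the angle moves right -> bottom -> left on screen: clockwise.
    circle = [
        (200 + 100 * math.cos(2 * math.pi * k / 32),
         300 + 100 * math.sin(2 * math.pi * k / 32))
        for k in range(32)
    ]
    print(dispatch(classify_stroke(circle)))        # toggle_lights
    print(dispatch(classify_stroke(circle[::-1])))  # None (counterclockwise)
    print(dispatch("knuckle_tap"))                  # toggle_thermostat
```

Keeping the gesture-to-action table separate from the recognizer means a mapping can be changed without touching the geometry.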

The Dark Side of Power

Absolute control reveals uncomfortable truths. I've grown disgusted by stock Android's limitations, physically recoiling when I use friends' "normie" devices. Their clumsy app-switching feels medieval. Worse still, I catch myself performing phantom gestures on tablets, microwaves, even car dashboards, my neural pathways rewired by this digital prosthetic. The app's developer documentation reads like occult scrolls, demanding sacrifices of time and sanity. Three weekends vanished into tweaking animation curves alone.

Yesterday's existential moment came when the panel anticipated my needs. As I headed to the pharmacy, it surfaced my insurance card before I searched for it. Not through creepy data-mining, but through pattern recognition on my location history from previous Thursdays at 2 p.m. This tool doesn't just obey; it learns. The realization chilled me: my phone now has reflexes faster than my conscious thoughts. We've achieved symbiosis, for better or worse.
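How the panel actually recognizes patterns isn't something I can see from the outside. One simple way to get the "Thursday at 2 p.m." behavior is a frequency count keyed by weekday and hour, and the Python sketch below swaps in that technique as an assumption. `SlotPredictor`, `SHORTCUTS`, and the three-visit threshold are all hypothetical.

```python
from collections import Counter, defaultdict
from datetime import datetime
from typing import Dict, Optional, Tuple

# Hypothetical: which card to surface for a predicted destination.
SHORTCUTS = {"pharmacy": "insurance_card"}


class SlotPredictor:
    """Counts visits per (weekday, hour) slot and predicts the usual place."""

    def __init__(self, min_visits: int = 3) -> None:
        self.min_visits = min_visits  # hypothetical confidence threshold
        self.history: Dict[Tuple[int, int], Counter] = defaultdict(Counter)

    def record(self, when: datetime, place: str) -> None:
        # datetime.weekday() counts Monday as 0, so Thursday is 3.
        self.history[(when.weekday(), when.hour)][place] += 1

    def predict(self, when: datetime) -> Optional[str]:
        # .get() does not create empty slots the way defaultdict[] would.
        counts = self.history.get((when.weekday(), when.hour))
        if not counts:
            return None
        place, visits = counts.most_common(1)[0]
        return place if visits >= self.min_visits else None

    def suggest_card(self, when: datetime) -> Optional[str]:
        place = self.predict(when)
        return SHORTCUTS.get(place) if place else None


if __name__ == "__main__":
    p = SlotPredictor()
    for day in (2, 9, 16):  # three Thursdays in May 2024
        p.record(datetime(2024, 5, day, 14, 5), "pharmacy")
    print(p.suggest_card(datetime(2024, 5, 23, 14, 30)))  # insurance_card
    print(p.suggest_card(datetime(2024, 5, 23, 10, 0)))   # None
```

The minimum-visit threshold is what separates a habit from a one-off trip: a single pharmacy run on a Thursday afternoon surfaces nothing.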
