My Silent Partner in Chaos

The fluorescent lights hummed like angry hornets overhead, casting stark shadows on the blood-smeared gurney. My fingers trembled as I scrolled through the fourth CT scan of the hour, caffeine jitters mixing with dread. Without warning, the trauma bay doors crashed open: a motorcycle accident victim, skull fractured, pupils unequal. I remember thinking, this is how it happens. This is how you drown in the flood of beeping monitors and stat pages, how a subtle midline shift on some intern's forgotten scan becomes paralysis for a grandmother because your eyes glazed over at 3 AM. That night, I missed it. The guilt tasted like bile for weeks.

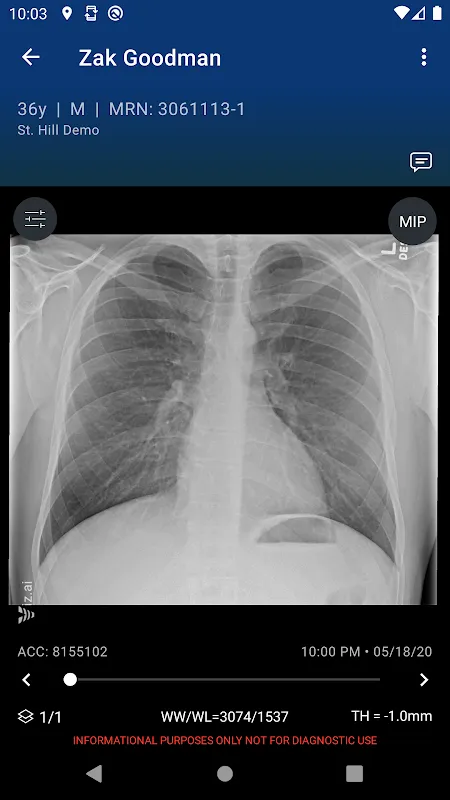

Then came Viz LVO. Not with fanfare, but like a stealthy ally slipping into our PACS. At first, I scoffed. Another algorithm promising miracles? But in the middle of a Tuesday night deluge of three strokes, a septic-shock patient coding, and a seizing toddler, its notification chimed. Not a blaring alarm, but a soft pulse on my phone. It had flagged a basilar tip occlusion in Mr. Henderson's scan, buried beneath layers of irrelevant data. I froze. The app didn't just highlight it; it mapped the clot's density with eerie precision before I had even ordered perfusion imaging. Under the hood, deep learning dissects vascular territories in milliseconds, transforming pixels into prognosis. I sprinted to neurointerventional, shouting for heparin. Later, the patient squeezed my hand. No bile this time, just the salt of my own sweat and relief.

But gods, it's flawed. Last month, during a lull, Viz.ai screamed a false positive on a calcification artifact. My pager erupted, nurses scrambled, and we wasted 20 minutes chasing a ghost. I nearly ripped the tablet from the wall. Why must the interface feel like a labyrinth? Tapping through four menus to mute alerts while a trauma patient bleeds out is madness. And yet… when Maria Rodriguez arrived, gray and gasping, the platform's triage hierarchy overrode everything. It tagged her massive PE before radiology had even loaded the study, auto-paging cardiology while I secured her airway. That's the paradox: a tool that's both scalpel and sledgehammer. The AI's neural nets, trained on millions of scans, don't feel fatigue and don't overlook a left MCA occlusion hiding in the noise. But they also don't understand human panic.

Now I trust it like a junior resident with savant-level instincts. It catches what my burnt-out retinas miss, turning chaos into clean protocols. Yesterday, as hail battered the ER windows, Viz flagged a dissection in a migraine patient. I didn't hesitate. No second-guessing, no frantic scrolling, just action. The app's cold logic is warmed by the pulses it saves: a father walking his daughter home today because this digital sentinel saw what sleep-deprived humans couldn't. Still, I curse its glitches. Still, I rely on it. In the end, it's not about the code. It's about the moments when technology stops being a tool and becomes the thin line between despair and hope.

Keywords: Viz.ai, news, AI diagnostics, emergency medicine, stroke intervention