NinjaOne: My IT Panic Button

Rain lashed against the office windows like angry fists when the alerts started screaming. Not the polite chirps of normal notifications – these were digital air-raid sirens blaring from every direction. My palms went slick against the mouse as three monitors exploded with red: server room temp critical, VPN tunnel collapsed, and – sweet mother of chaos – the CEO's laptop picked the middle of his investor pitch to freeze solid. My old toolkit felt like bringing spoons to a gunfight: I was frantically alt-tabbing between fragmented dashboards while precious seconds bled out. That's when my trembling fingers mashed the NinjaOne icon on my tablet.

What happened next wasn't just relief – it was visceral. Like slamming on noise-canceling headphones at a metal concert. Suddenly all that screaming chaos condensed into a single, breathing organism on my screen. The unified dashboard didn't just show problems; it showed relationships. That server overheating? Its fan metrics correlated perfectly with the VPN gateway's timestamped collapse. NinjaOne's secret sauce isn't the pretty UI – it's how their lightweight agents feed real-time telemetry into a correlation engine that maps infrastructure dependencies like neural pathways. Watching those connection lines pulse between devices felt less like IT work and more like conducting a symphony through the storm.
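How NinjaOne's correlation engine works internally isn't described here, so what follows is only a generic sketch of what "these two metrics correlated" means at its simplest: a Pearson correlation coefficient computed over two series sampled on the same timestamps. The function name, the metric names, the sample values and the 0.8 alerting threshold are all hypothetical, and a real dependency-mapping engine would likely weigh far more signals than one coefficient.

```python
# Hypothetical sketch: a plain Pearson correlation, not NinjaOne's engine.
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length, time-aligned series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no correlation signal
    return cov / sqrt(var_x * var_y)


# Hypothetical per-minute samples, aligned on the same timestamps.
server_temp_c = [22, 23, 25, 28, 32, 36]
vpn_packet_loss_pct = [0, 0, 1, 3, 8, 15]

r = pearson(server_temp_c, vpn_packet_loss_pct)
if r > 0.8:  # hypothetical alerting threshold
    print(f"server temperature and VPN packet loss move together (r={r:.2f})")
```

A coefficient near 1 means the two series rise and fall together; on its own it does not say which metric drove the other.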

But here's where the magic turned tactical. Spotting the CEO's laptop blinking red, I dove into NinjaOne's remote toolkit. No clunky VPN reconnect – their direct P2P protocol bypassed our crumbling network like a back-alley shortcut. As his frozen presentation screen mirrored onto my tablet, I could almost taste his panic through the pixels. One forced reboot command later, I heard his strained "it's... working?" through the office walls. That's when I noticed NinjaOne quietly flagging the root cause: an overlooked driver update conflicting with our new security stack. The platform didn't just put out fires – it autopsied the arsonist.

Don't get me wrong – this lifesaver has teeth that bite back. Try customizing their automated patch deployment templates after midnight on three hours of sleep. Their YAML-based configuration system feels like solving a Rubik's cube blindfolded when you're exhausted, with error messages about as helpful as a fortune cookie. I've rage-typed more than one support ticket at 3 AM when the scheduler ignored my exclusion groups. Yet even through gritted teeth, I have a grudging respect for how their delta patching algorithm pushes only the changed bits instead of full installers, saving us terabytes in bandwidth every month.
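Delta patching boils down to "ship only what changed." How NinjaOne actually computes its deltas isn't described here, so this is a minimal sketch of one common approach, assuming fixed-size blocks compared by SHA-256 digest: the endpoint reports a digest for each block of the file it already has, and only blocks whose digest differs get sent. The function names and the 4 KiB block size are hypothetical.

```python
# Hypothetical sketch of fixed-block delta transfer, not NinjaOne's algorithm.
import hashlib

BLOCK_SIZE = 4096  # assumed block size; real delta-update schemes choose their own


def block_digests(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """SHA-256 digest of each fixed-size block (the last block may be short)."""
    return [hashlib.sha256(data[i:i + block_size]).digest()
            for i in range(0, len(data), block_size)]


def make_delta(old_digests: list[bytes], new: bytes,
               block_size: int = BLOCK_SIZE) -> dict[int, bytes]:
    """Return {block_index: bytes} for every block of `new` the endpoint lacks."""
    delta = {}
    for idx, start in enumerate(range(0, len(new), block_size)):
        block = new[start:start + block_size]
        if idx >= len(old_digests) or hashlib.sha256(block).digest() != old_digests[idx]:
            delta[idx] = block
    return delta


def apply_delta(old: bytes, delta: dict[int, bytes], new_len: int,
                block_size: int = BLOCK_SIZE) -> bytes:
    """Rebuild the new file from the endpoint's old copy plus the changed blocks."""
    n_blocks = (new_len + block_size - 1) // block_size
    out = []
    for idx in range(n_blocks):
        if idx in delta:
            out.append(delta[idx])
        else:
            out.append(old[idx * block_size:(idx + 1) * block_size])
    return b"".join(out)[:new_len]


old = bytes(10 * BLOCK_SIZE)   # the endpoint's current 40 KiB file
new = bytearray(old)
new[5000] = 0xFF               # a one-byte change inside block 1
new = bytes(new)

delta = make_delta(block_digests(old), new)
print(f"sending {len(delta)} of {len(block_digests(new))} blocks")
```

Fixed blocks are the simplest choice but break down when bytes are inserted mid-file, because every later block shifts; rsync-style rolling checksums exist to handle that case.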

The real gut-punch came weeks later during our "lessons learned" meeting. Pulling up NinjaOne's incident timeline felt like rewatching a disaster movie with the director's commentary. Heatmaps showed exactly when East Coast logins spiked before the VPN chokehold. Their predictive load modeling – based on historical patterns and real-time SNMP metrics – had actually flagged capacity risks days prior. My cheeks burned realizing we'd dismissed the alert as "overcautious." That's NinjaOne's brutal genius: it remembers your sins. The way its machine learning crunches failure patterns forces a kind of uncomfortable accountability that no spreadsheet post-mortem ever could.
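How NinjaOne's predictive load model works internally isn't spelled out here, and it is described as machine learning, so treat this as a deliberately simpler stand-in: a least-squares linear trend over daily peak utilization, extrapolated to estimate how many days remain before it crosses a capacity threshold. The function names, the 7-day horizon and the sample numbers are hypothetical.

```python
# Hypothetical sketch: linear-trend capacity forecast, not NinjaOne's model.
from typing import Optional


def days_until_threshold(daily_peaks: list[float], threshold: float) -> Optional[float]:
    """Fit a least-squares line to daily peaks (days 0..n-1) and return how many
    days after the last sample the trend reaches `threshold`.
    Returns 0.0 if the trend is already at or above it, None if it never will."""
    n = len(daily_peaks)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(daily_peaks) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(daily_peaks))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    current = intercept + slope * (n - 1)  # trend value on the last sampled day
    if current >= threshold:
        return 0.0
    if slope <= 0:
        return None  # a flat or falling trend never crosses
    return (threshold - current) / slope


def capacity_at_risk(daily_peaks: list[float], threshold: float,
                     horizon_days: float = 7) -> bool:
    """Flag a risk if the trend crosses `threshold` within `horizon_days`."""
    eta = days_until_threshold(daily_peaks, threshold)
    return eta is not None and eta <= horizon_days


# Hypothetical daily peak VPN session utilization, in percent of capacity.
peaks = [60, 62, 64, 66, 68]
print(days_until_threshold(peaks, threshold=80))  # 6.0
print(capacity_at_risk(peaks, threshold=80))      # True
```

A straight line ignores daily and weekly cycles, so it can only ever be a rough early warning.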

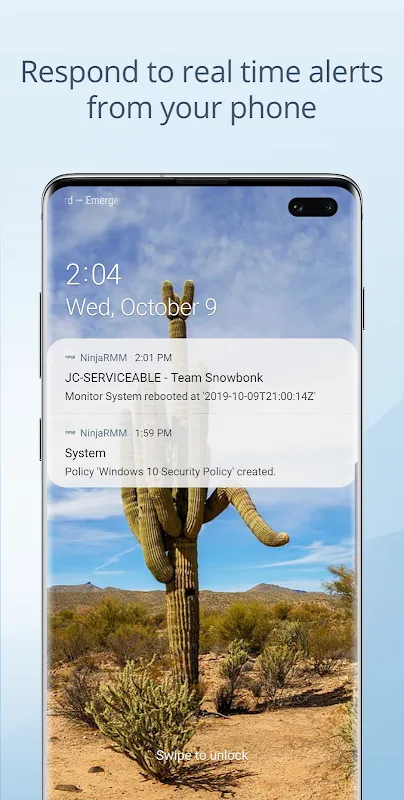

Now when storms brew outside, I catch myself tracing the rain streaks with one eye on NinjaOne's weather map of our network. It's not perfect – God help you if you need custom API integrations without their premium tier – but that single pane of glass has rewired my nervous system. Yesterday a junior tech yelled "Switch 7 just died!" across the bullpen. I didn't even look up from my coffee. I just tapped the NinjaOne widget on my phone, saw its redundant link already carrying traffic, and mumbled "handled" through the steam. The silence that followed tasted like victory.

Keywords: NinjaOne, news, IT infrastructure, incident response, remote management