The Day Silence Broke

Rain lashed against the clinic windows as I knelt beside Jamie's wheelchair, wiping drool from his chin for the third time that morning. His eyes, those deep ocean-blue pools, held storms of unspoken words. Five years old, non-verbal, living with cerebral palsy: my little boy, trapped behind invisible walls. "Do you want the red truck or the blue blocks today, sweetheart?" I asked, holding up both toys. His gaze flickered toward the window, then back to me with that familiar frustration simmering beneath his long lashes. Another guessing game destined for failure.

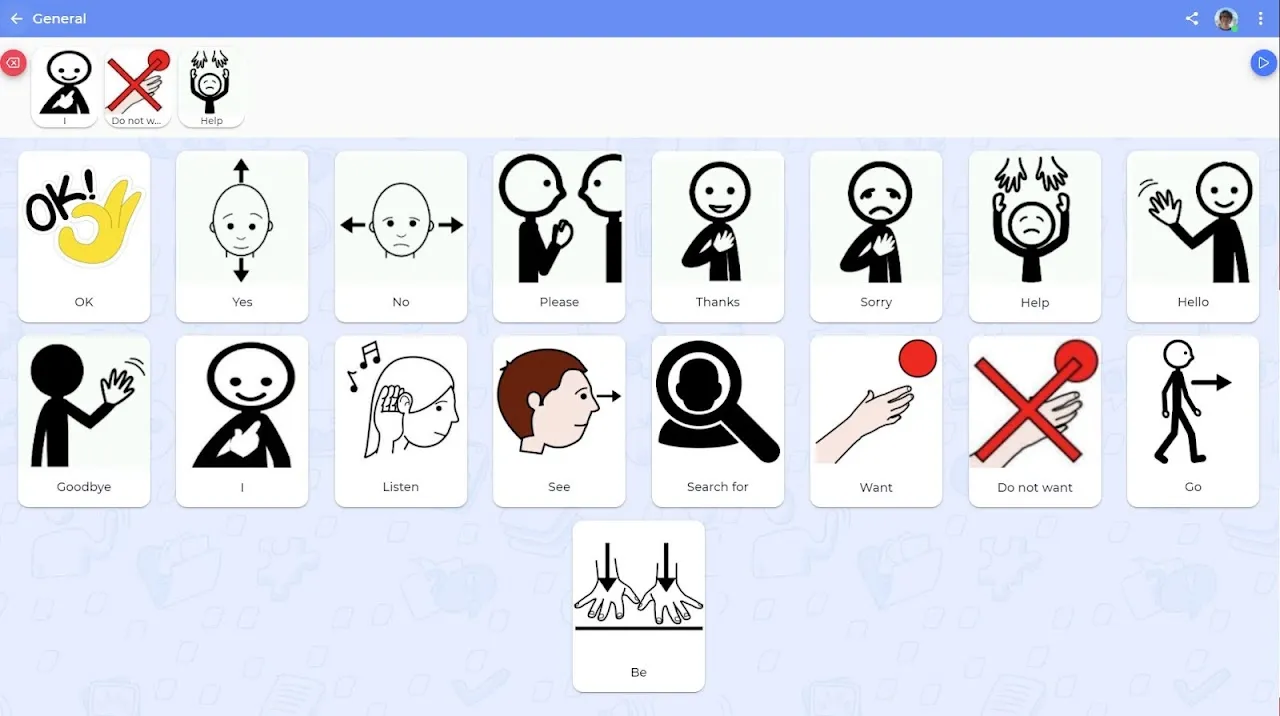

When Dr. Evans suggested Expressia, I nearly laughed. Another app? We'd tried seven already: clunky grid systems, childish cartoons, one that crashed whenever Jamie slammed his fist in excitement. But desperation smells like antiseptic and broken promises, so I downloaded it that night while Jamie slept curled against my chest. First surprise: no garish colors assaulting my sleep-deprived eyes. Just clean grays and intuitive symbols appearing like constellations across the dark interface. Within minutes, I'd created "Jamie's World," with photos of his favorite blanket, the park slide, and his therapy dog, Benson. Its customizable neural network learned his gaze patterns faster than any of the seven apps before it, adjusting its sensitivity whenever his head lolled to the side.

Tuesday morning ritual: oatmeal. Jamie's high chair faced me as always, but today the tablet stood propped beside his juice cup. "Hungry?" I asked, holding up the spoon. His eyes locked onto the Expressia screen, where Benson's photo glowed beside an apple icon. Then it happened: that slight head tilt he makes when concentrating. His knuckle brushed the "Benson" tile. A synthesized voice declared "DOG!", clear as cathedral bells. My wooden spoon clattered into the oatmeal pot. Not a request but a statement. A declaration of existence. Warmth spread through my chest like spilled honey.

Later that week came the revolution. Jamie's physical therapist was coaxing him through leg exercises when he suddenly craned his neck toward the tablet across the room. With agonizing slowness, his finger extended, trembling but deliberate, and tapped twice: "NO," then "STOP." The robotic voice echoed, "NO. STOP." The therapist froze mid-count. For seventeen seconds, the only sounds were the rain on the roof and Jamie's ragged breathing. Then she whispered, "Okay, buddy. We stop." That night I wept into Benson's fur, replaying how Expressia's hierarchical symbol encoding had turned picture sequencing into real agency. My child hadn't just communicated; he'd set a boundary.

Of course, it's not all miracles. Last Thursday the predictive text suggested "PAIN" when Jamie wanted "PAINT," which led to a moment of unnecessary panic. And the subscription cost? Let's just say I've eaten more peanut butter sandwiches than any grown woman should. But when Jamie combines "MAMA" + "HUG" without prompting during a meltdown? That's when I feel the raw power of context-aware language generation: algorithms reading his emotional state from his usage patterns. Technology as bridge builder.

Yesterday we sat watching pigeons in the park. Jamie nudged the tablet toward me, finger hovering. Tap. "MAMA." Tap. "LOOK." Tap. "BIRD." Three words. One sentence. His first complete thought, handed to me like a dandelion crown. In that moment the app disappeared, and there was just my boy's triumphant grin, gap-toothed and glorious, sunlight catching the drool on his chin like diamonds. The silence didn't break that day. It transformed.

Keywords: Expressia, news, AAC technology, non-verbal communication, assistive devices