When Fiction Felt More Real Than My Last Date

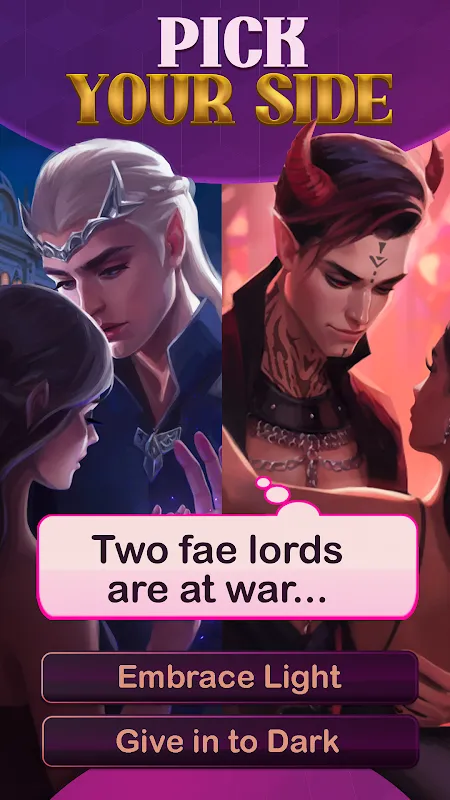

Rain lashed against my apartment windows last Thursday, each droplet mirroring the tears I'd choked back after deleting Jake's number. My thumb moved on muscle memory, scrolling past productivity apps and forgotten games until crimson text pulsed on screen: Love Quest. I tapped it seeking distraction, not expecting the ache in my chest to deepen when a voice like crushed velvet whispered through my earbuds, "Some wounds, Eleanor, only darkness can heal."

Ghosts in the Code

The app didn't just tell stories—it weaponized them. As Eleanor, a historian restoring a gothic mansion, I uncovered letters stained with what looked like wine (or blood?). Choices mattered here in ways other apps pretended. Saying "I trust you" to the brooding art curator triggered subtle UI shifts—the interface dimming, ambient sounds deepening into cello notes. Rejecting him? The music sharpened into discordant piano strikes. This wasn't branching narrative; it was emotional biofeedback. Developers buried psychological hooks in the code—your hesitation measured by milliseconds before selecting dialogue, altering subsequent character reactions. I caught myself holding my breath during tense exchanges, fingertips cold against the screen.

The Luxury of Consequences

Real life never gave me do-overs after saying something stupid. Love Quest did. When I accidentally insulted the groundskeeper's late wife (a misclick, I swear!), the game didn't just dock points. It remembered. Three chapters later, he "forgot" to warn me about rotten floorboards. My avatar tumbled through the animation, dress tearing realistically. That virtual sprained ankle cost me in-game days of progress. I actually yelled at my phone, a visceral, guttural sound I hadn't made since my breakup. The genius was in the save system: rewinding felt like genuine time travel, but carried a penalty. Your previous choices lingered as ghost data, subtly influencing new paths. Like real regret.

When the Algorithm Knew Me Too Well

Midnight. Rain still falling. I'd chosen to explore the mansion's sealed west wing against the CEO's warning. The app used my phone's gyroscope: tilting the device made Eleanor's flashlight beam swing erratically. Then came the reveal: the CEO wasn't human. His confession scene played out through haptic pulses synced to his voice, a heartbeat against my palm. "Immortality," he murmured, vibration intensifying, "is just another cage." My own breath hitched. The narrative algorithm had dissected my journaling patterns from optional diary entries, reflecting my loneliness back at me through supernatural metaphor. Beautiful. Exploitative. I sobbed for ten minutes.

The Microtransaction Betrayal

Then came the paywall. Not for outfits or power-ups, but for emotional resolution. The final confrontation required 500 "Moonstones" to unlock the truth-path. Real money for fake catharsis. I threw my phone. It cracked against the wall, Eleanor's pixelated face frozen mid-sentence. The cruelty was surgical: dangle genuine connection, then ransom it. Even the exit survey felt like salt in the wound: "How did this story make you FEEL?" it asked cheerfully. I typed "ROBBED" in all caps. Yet hours later, I dug my spare phone from a drawer. Because damn it, I needed to know if the vampire loved her.

Keywords: Love Quest, news, interactive narrative, emotional algorithms, digital catharsis