When My Heart Hammered at 3 AM

Blood pounded in my ears like war drums as I clutched my chest, back pressed against cold bathroom tiles. Sweat glued my t-shirt to skin still smelling of burnt coffee and stale deadlines. That third consecutive all-nighter of coding had snapped something primal: a tremor in my left arm, dizziness swallowing the pixel-lit room. My Apple Watch screamed 178 BPM while I mentally drafted goodbye texts. This wasn’t burnout; it felt like obituary material.

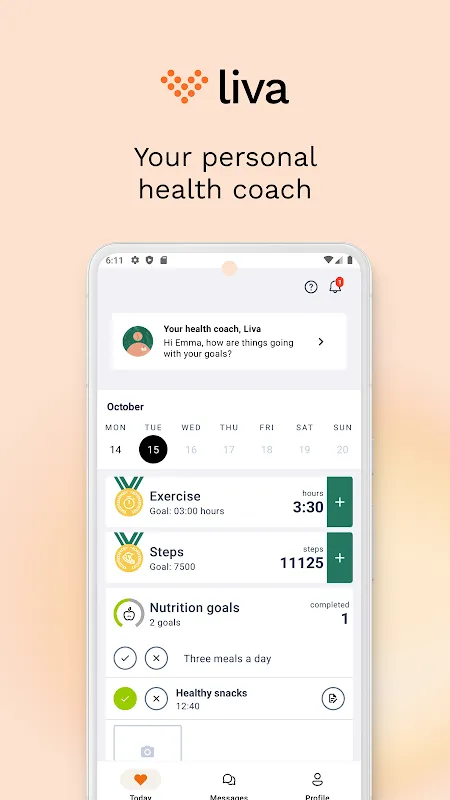

Enter Liva—not through some app store epiphany, but as a trembling thumb-stab at my emergency contacts list. I’d installed it months prior during a "wellness phase," burying it between cryptocurrency trackers and food delivery apps. When its interface bloomed onscreen amidst the panic, I expected robotic CPR instructions. Instead, it asked: "Describe the fear, not just the symptoms." No dropdown menus. No "select pain level." Just a blinking cursor in a text field softer than ER fluorescents.

Code Red Compassion

I vomited fragments into that box—"chest vise," "screen blur," "regretting every Red Bull since 2019." Within 90 seconds, Liva cross-referenced my wearables’ biometric freefall with lexical analysis of my ramble. Its response wasn’t ER coordinates (though it offered them). It prescribed: "Sit. Breathe through your nose for :07. Exhale through mouth for :11. Repeat until you read this." Below, a looping animation of expanding/collapsing lungs synced to my watch’s haptic pulse. The genius? It hijacked my hyperfocus. Counting breaths became debugging a breathing algorithm—distracting me from dying long enough to stop feeling like I was.
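The prompt describes a simple paced-breathing cycle. As a minimal sketch, assuming a fixed 7-second inhale, an 11-second exhale, and one haptic pulse at the start of each phase, the watch's cue timings could be generated like this. `pacing_schedule`, `Pulse`, and the one-pulse-per-phase model are hypothetical illustrations, not Liva's code.

```python
from dataclasses import dataclass

INHALE_S = 7   # nasal inhale length, from the ":07" prompt
EXHALE_S = 11  # mouth exhale length, from the ":11" prompt


@dataclass
class Pulse:
    t: int       # seconds since the exercise started
    phase: str   # "inhale" or "exhale"
    breath: int  # 1-based breath number


def pacing_schedule(breaths: int) -> list[Pulse]:
    """Hypothetical model: one haptic pulse at the start of every inhale and exhale."""
    cycle = INHALE_S + EXHALE_S
    pulses = []
    for n in range(breaths):
        start = n * cycle
        pulses.append(Pulse(start, "inhale", n + 1))
        pulses.append(Pulse(start + INHALE_S, "exhale", n + 1))
    return pulses


schedule = pacing_schedule(15)
total_s = 15 * (INHALE_S + EXHALE_S)
print(len(schedule), total_s)  # 30 270
```

At 18 seconds per cycle, fifteen breaths take 270 seconds, roughly four and a half minutes, or about 3.3 breaths per minute.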

Later, I’d learn about the NLP neural nets that parse emotional context from phrases like "chest vise" versus "stabbing pain," and how the system weights a user’s own vocabulary more heavily than generic symptom databases. But in that moment? It was pure witchcraft. My tremors eased around breath #9. By #15, the watch showed 110 BPM. All without sirens.
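The essay credits neural networks here. The sketch below swaps in something far simpler, plain keyword matching, purely to show what weighting personal vocabulary above a generic database means. Both lexicons, their concern labels, and the 2:1 weighting are invented for illustration and are not Liva's model.

```python
from collections import defaultdict

# Hypothetical generic symptom lexicon: phrase -> concern label.
GENERIC_LEXICON = {
    "stabbing pain": "cardiac_alarm",
    "chest pain": "cardiac_alarm",
    "dizziness": "lightheadedness",
}

# Hypothetical personal lexicon: phrases this user has used before,
# mapped to what they turned out to mean for them.
USER_LEXICON = {
    "chest vise": "panic",
    "screen blur": "panic",
}

USER_WEIGHT = 2.0     # assumption: personal vocabulary counts double
GENERIC_WEIGHT = 1.0


def score_concerns(text: str) -> dict[str, float]:
    """Sum weighted phrase matches per concern label (keyword stand-in for an NLP model)."""
    lowered = text.lower()
    scores = defaultdict(float)
    for lexicon, weight in ((USER_LEXICON, USER_WEIGHT), (GENERIC_LEXICON, GENERIC_WEIGHT)):
        for phrase, label in lexicon.items():
            if phrase in lowered:
                scores[label] += weight
    return dict(scores)


report = "chest vise, screen blur, dizziness, regretting every Red Bull since 2019"
print(score_concerns(report))  # {'panic': 4.0, 'lightheadedness': 1.0}
```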

The Grocery Store Gambit

Recovery demanded humility. Like standing in Whole Foods paralyzed by kale. Liva’s "Adaptive Scaffolding" feature, a term I’d normally roll my eyes at, manifested as a real-time game. As my camera scanned avocados, the app overlaid green circles: "Choose 2 healthy fats." Red brackets around processed snacks whispered: "Temptation tax: 22 mins extra cardio." Brutal? Absolutely. Effective? I left with salmon and shame instead of frozen pizza. The app’s secret sauce? Machine learning that mapped my past 147 food logs against my biometric reactions. That "tax" wasn’t arbitrary: the app calculated each movement penalty from my personal metabolic drag.
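The essay doesn't say how the "temptation tax" is computed beyond mapping food logs to biometric reactions. One hedged reading: estimate a personal burn rate from logged workouts, then convert any calories past the day's remaining budget into cardio minutes. The functions, the budget rule, and every number below are assumptions, chosen so the arithmetic lands on the 22 minutes from the story.

```python
import math
from statistics import mean


def personal_burn_rate(workouts: list[tuple[float, float]]) -> float:
    """Average kcal burned per minute across logged (kcal, minutes) workouts."""
    return mean(kcal / minutes for kcal, minutes in workouts)


def temptation_tax(snack_kcal: float, budget_left_kcal: float, burn_rate: float) -> int:
    """Cardio minutes needed to burn off the part of a snack that exceeds today's budget."""
    excess = max(0.0, snack_kcal - budget_left_kcal)
    return math.ceil(excess / burn_rate)  # round up: partial minutes still count


# Invented example data: three logged workouts as (kcal, minutes).
rate = personal_burn_rate([(300, 30), (220, 20), (180, 20)])  # 10, 11, 9 kcal/min
print(rate)                            # 10.0
print(temptation_tax(420, 200, rate))  # 22
```

A snack that fits inside the remaining budget costs nothing, which keeps the penalty tied to the overshoot rather than to the food itself.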

Yet for all its brilliance, Liva infuriated me weekly. Its sleep module once scolded "Poor regeneration risk" after I logged 4.5 hours of sleep. I snapped back via voice note: "Deadlines don’t care about REM cycles!" The reply? A clipped "Understood" and, cruelly, no further nudges. I missed its nagging. It turns out its behavioral AI detects when a user crosses an irritation threshold and silences prompts to avoid disengagement. A smart feature that felt like abandonment.
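The silencing behavior resembles a per-topic suppression gate. Here is a minimal sketch, assuming pushback is scored per topic and decays daily. `NudgeGate`, the threshold, and the decay factor are hypothetical, and the decay is an added assumption that lets nagging resume after a cooling-off period, which is what the author says they missed.

```python
from dataclasses import dataclass, field


@dataclass
class NudgeGate:
    """Hypothetical per-topic prompt suppressor driven by user pushback."""

    threshold: float = 1.0  # assumption: one firm pushback silences a topic
    decay: float = 0.5      # fraction of irritation that survives each day
    irritation: dict[str, float] = field(default_factory=dict)

    def record_pushback(self, topic: str, strength: float = 1.0) -> None:
        self.irritation[topic] = self.irritation.get(topic, 0.0) + strength

    def end_of_day(self) -> None:
        # Irritation fades, so a silenced topic can eventually resume.
        for topic in self.irritation:
            self.irritation[topic] *= self.decay

    def should_nudge(self, topic: str) -> bool:
        return self.irritation.get(topic, 0.0) < self.threshold


gate = NudgeGate()
gate.record_pushback("sleep")          # "Deadlines don't care about REM cycles!"
print(gate.should_nudge("sleep"))      # False
print(gate.should_nudge("hydration"))  # True
gate.end_of_day()
print(gate.should_nudge("sleep"))      # True
```

With these settings, one pushback silences a topic for a single day, while a pushback of strength 2.0 takes two days to fall back below the threshold.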

Then came the protein powder incident. When I scanned a supplement, Liva flashed "Lab test inconsistency detected" with links to third-party purity reports. Those reports showed trace heavy metals in a brand I’d trusted for years. I raged, not at the app, but at the industry. That’s Liva’s power: it weaponizes data into visceral revelations. No wellness platitudes. Just cold, lifesaving facts wrapped in code.

Now? My watch buzzes not with panic, but with gentle pulses—Liva’s "hydration nudge" as I code. My bathroom’s a panic-free zone. And that 3 AM terror? It lingers… but so does the ghost of a blinking cursor asking me to name it.

Keywords: Liva, news, AI health coach, biometric NLP, adaptive scaffolding