When Words Failed, HoneySha Spoke

The sticky Kolkata heat clung to my skin like plastic wrap as I scrambled behind the community kitchen counter, lentils boiling over as three volunteers shouted conflicting instructions. Across from me, Mrs. Das—a widow who’d lost her ration card—clutched her sari pallu, eyes darting between my face and the simmering pots. Her Bengali poured out in panicked bursts: "Aami chaal chharbena... shukno morich lagbe!" I caught "chaal" (rice) and "morich" (chili), but the rest dissolved into static. My phrasebook was buried under sacks of turmeric. That’s when I remembered the blue icon on my phone.

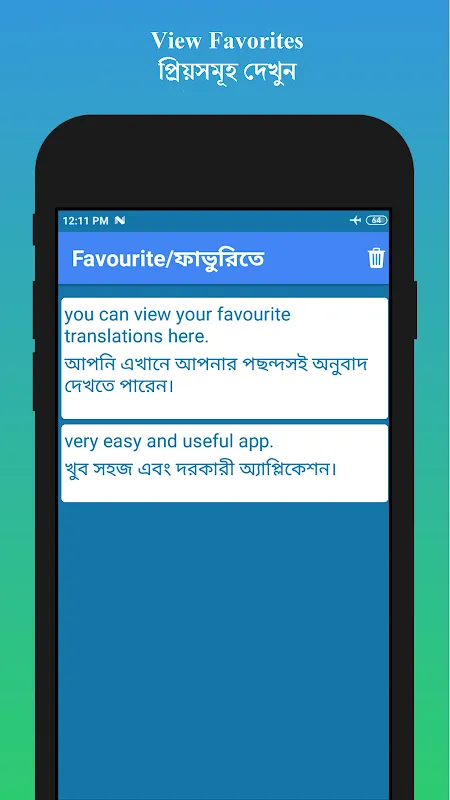

Fumbling with flour-dusted fingers, I tapped HoneySha. Mrs. Das leaned in, watching the screen flicker to life. As she spoke again, English letters materialized in real time: "I cannot digest rice... need dried chilies for my arthritis." Relief washed over her face before I even hit "speak" and the app’s synthesized Bengali echoed my words back to her. She nodded vigorously, knuckles unclenching. Later, I’d learn the tech magic: neural networks parsing her dialect’s tonal curls, converting vocal vibrations to text in 200 ms, then weaving English equivalents through transformer models trained on medical journals. But in that moment? Pure sorcery.

Chaos returned when monsoon rains hammered the tin roof. Volunteers yelled inventory lists; frying pans hissed. I held HoneySha toward Mr. Ghosh, who needed soy substitutes for his diabetic wife. "No meat, only bean curd!" he hollered over the din. The app captured "no" and "meat," but spat out: "Refuse flesh. Desire curdled beans." He scowled ("Curdled? Is spoiled food!") until I rephrased into the mic: "Plant protein, like tofu." The translator redeemed itself, flashing "tofu" in Bengali script with a cooking pot emoji. Ghosh’s laughter boomed through the hall. Yet the flaw lingered: background noise cripples the app’s speech recognition, which mistakes clattering plates for consonants. I started cupping my hand around the mic like shielding a match flame.

Two weeks later, Mrs. Das returned with a clay pot of begun bhaja. "Tomar jonno," she murmured—for you. HoneySha hovered between us, silent this time. I understood. We’d crossed beyond needing algorithms. Still, as she described frying the eggplant in mustard oil, I discreetly recorded her recipe via the app’s text-scan feature. Later, the AI organized her fragmented instructions into bullet points, even flagging "mustard oil" as high-smoke-point for my clumsy Western stovetop. This tool isn’t just breaking language walls; it’s preserving the fragile threads of connection in their truest form—spices, memories, gratitude.
