When My Voice Replaced the Pen

It was 3 AM when my trembling fingers finally unclenched from the mouse. Twelve hours into an emergency shift, the glow of the EMR screen burned ghost trails across my vision. Each click felt like dragging concrete blocks: documenting a dislocated shoulder had just consumed 37 minutes of my rapidly decaying sanity. That’s when the resident beside me slammed his laptop shut. "Try dictating," he muttered, nodding at my cracked phone. "Just talk to it like a drunk med student."

The Whisper Test

Next morning, ICU rounds. Between ventilator alarms and rattling medication carts, I thumbed open the app. "57-year-old male, STEMI s/p PCI," I mumbled into the chaos. Before I could blink, the screen filled with crisp text: precise cardiac terminology auto-capitalized, medications bulleted. My scribbled shorthand looked primitive next to this real-time transcript. When I said "tachycardia," it didn’t confuse it with "tacos," a miracle considering my post-call slurring.

But Thursday broke the spell. Code blue in Bay 4. Amid compressions and epi vials, I barked into my phone: "Non-responsive, pupils fixed..." The screen flashed: "Non-responsible pupils faxed." My stomach dropped. Later, I learned that the ER’s concrete walls swallowed high frequencies. The app’s adaptive noise filtering had limits; it wasn’t magic. I spent 20 minutes correcting the note by hand, cursing the false promise of seamless tech.

Ghosts in the Machine

That failure haunted me. At the next admission, I tested its limits. Leaning against a supply closet, I whispered: "Neuro: denies photophobia but reports phonophobia secondary to..." I paused deliberately. "...husband’s banjo practice." The sentence structured itself perfectly: subordinate clauses intact, medical jargon prioritized over marital complaints. Behind that simplicity? Layers of contextual algorithms parsing syntax before I’d finished exhaling.

Yet the friction points gnawed. Customizing templates felt like teaching Latin to a toddler. When I created a rheumatology assessment shortcut, the app demanded 14 validation steps. During a lupus flare consult, I triggered the template only to watch it regurgitate an oncology workup. "Malignant?" my patient gasped at the screen. I wanted to hurl the phone through the stained-glass window of the chapel across the hall.

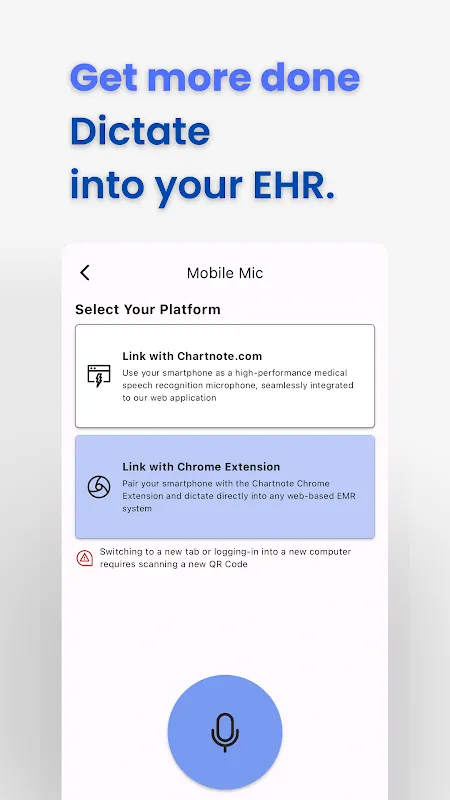

Integration became my obsession. Syncing to Epic meant navigating Byzantine security protocols that made HIPAA feel like a spy thriller. For three nights, I sacrificed sleep to wrangling OAuth tokens instead of reading case studies. The victory moment, watching my dictated review of systems (ROS) populate directly into patient charts, sparked a rush rivaling my first successful intubation. That’s when I understood: this wasn’t just transcription. It was cognitive offloading, freeing neurons for diagnostic puzzles instead of clerical gymnastics.

Echoes in Empty Hallways

Last month, treating patients with aphasia changed everything. Mr. Petrov could utter only fragmented nouns: "Apple... train... headache." As I dictated my observations, he pointed at my phone, eyes wide. I handed it over. "Red... round... sweet," he rasped. The screen displayed: "Patient describes apple." His laughter cracked the silence, our first unbroken exchange in weeks. In that instant, the tech ceased being a tool. It became a bridge.

Tonight, walking past the nursing station, I hear my own exhaustion echoed in my colleagues’ voices. "Chartnote," someone groans, "transcribed ‘palpable panic’ as ‘palatable panic’." We laugh, but the resentment runs thin. Because when I clock out 90 minutes early thanks to verbal charting, I know what I’ll reclaim: sunlight on my drive home, dinner before midnight, the weightlessness of unused prescription pads in my pocket. The documentation beast remains, but now we fight it with our throats instead of our tendons.

Keywords: Chartnote Mobile, news, voice recognition, clinical workflow, medical documentation