Zenduty: My Midnight Guardian


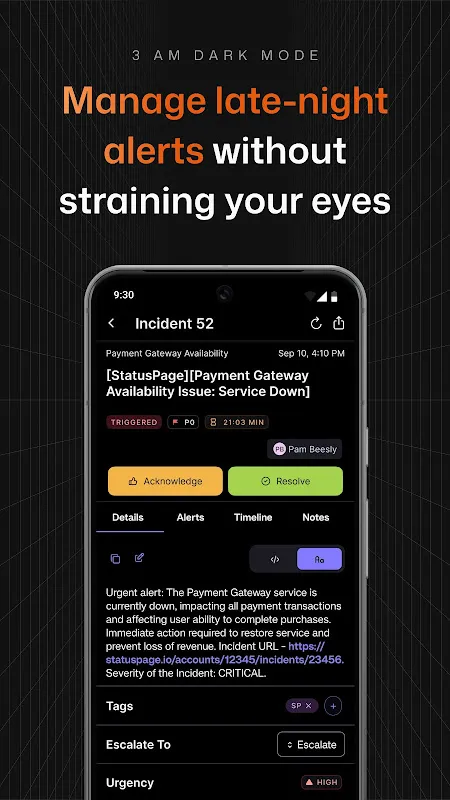

That visceral punch to the gut when Slack explodes at 2:47 AM: I know it too well. My fingers trembled against the cold aluminum of my laptop as our monitoring dashboard hemorrhaged crimson alerts. Our entire authentication cluster had flatlined during peak European traffic, and I was drowning in fragmented PagerDuty notifications. Then Zenduty took control like a conductor. Within seconds, it collapsed 87 disjointed alerts into a single contextualized incident, triggered our failover runbook, and paged the on-call squad via SMS, Slack DMs, and even a brutal phone call that nearly gave Dave a heart attack.

I remember the acidic taste of panic as database latency spiked to 9 seconds. Before Zenduty, this would have meant a four-hour blame-storming session. Instead, its noise-reduction rules silenced irrelevant CloudWatch alarms while escalating to our lead SRE based on service criticality tags. The platform even pulled Grafana snapshots into the war-room channel automatically, so there was no more frantic screenshot scrambling while customers rage-tweeted.
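The grouping and escalation described above can be sketched in a few lines of Python. This is not Zenduty's actual correlation logic; it is a minimal illustration assuming alerts are keyed by service name, with a hypothetical noise rule (low-severity CloudWatch alarms are dropped) and a hypothetical table mapping criticality tiers to escalation targets.

```python
from dataclasses import dataclass, field

SEVERITY_ORDER = ["low", "high", "critical"]

@dataclass
class Alert:
    service: str
    source: str
    severity: str  # one of SEVERITY_ORDER

@dataclass
class Incident:
    service: str
    alerts: list = field(default_factory=list)

    @property
    def severity(self) -> str:
        # An incident is as severe as its worst alert.
        return max((a.severity for a in self.alerts), key=SEVERITY_ORDER.index)

# Hypothetical suppression rule: low-severity CloudWatch alarms count as noise.
def is_noise(alert: Alert) -> bool:
    return alert.source == "cloudwatch" and alert.severity == "low"

# Hypothetical criticality tags and escalation targets (illustrative names only).
SERVICE_CRITICALITY = {"auth": "tier-1", "reporting": "tier-3"}
ESCALATION = {"tier-1": "lead-sre", "tier-3": "primary-on-call"}

def correlate(alerts):
    """Drop noisy alerts and fold the rest into one incident per service."""
    incidents = {}
    for alert in alerts:
        if is_noise(alert):
            continue
        incident = incidents.setdefault(alert.service, Incident(alert.service))
        incident.alerts.append(alert)
    return list(incidents.values())

def escalation_target(incident: Incident) -> str:
    # Unknown services fall back to the lowest tier.
    tier = SERVICE_CRITICALITY.get(incident.service, "tier-3")
    return ESCALATION[tier]
```

Fed 86 critical alerts from the `auth` service plus one low-severity CloudWatch alarm, `correlate` returns a single incident, and `escalation_target` routes it to `lead-sre`. Real platforms correlate on richer signals (time windows, fingerprints, topology), but keying on service keeps the idea visible.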

What truly floored me was watching the postmortem auto-generate. As we sipped tepid coffee at dawn, Zenduty had already timestamped every action, from Maria's Kubernetes rollback to the moment AWS network flaps triggered the cascade. That forensic precision used to take us days of log diving. Now? Automated incident timelines are our accountability lifeline, exposing our blind spots with surgical cruelty. I both loved and resented how it held up a mirror to our architectural debt.

The magic lives in its API-first guts. When we integrated Zenduty's webhooks with Terraform Cloud, it started auto-pausing deployments during active incidents: no more "fix-forward" disasters. But Christ, configuring those event rules felt like brain surgery. I spent three solid weekends wrestling with its YAML-based policy engine, cursing its stubborn refusal to recognize our custom severity matrix. Worth every grey hair, though, when it intercepted that cascading Redis failure last quarter before our checkout page even stuttered.
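One way to approximate that deploy freeze is a small webhook handler that locks a Terraform Cloud workspace while an incident is open and unlocks it on resolution. The sketch below assumes that approach; it builds, but does not send, a request to Terraform Cloud's workspace `actions/lock` and `actions/unlock` endpoints. The incoming payload shape (`event_type`, `incident.title`) and the event names are hypothetical placeholders, not Zenduty's documented webhook schema, so check the real payload before wiring anything up.

```python
import json
import urllib.request

TFC_API = "https://app.terraform.io/api/v2"

# Hypothetical event names; the real webhook payload may differ.
LOCK_EVENTS = {"incident_triggered"}
UNLOCK_EVENTS = {"incident_resolved"}

def build_tfc_request(event: dict, workspace_id: str, token: str):
    """Map an incident webhook to a Terraform Cloud workspace lock/unlock request.

    Returns None when the event should leave the workspace untouched.
    """
    kind = event.get("event_type")
    if kind in LOCK_EVENTS:
        action = "lock"
        title = event.get("incident", {}).get("title", "active incident")
        # The lock reason shows up in Terraform Cloud, explaining why runs are blocked.
        body = json.dumps({"reason": f"Deploys paused: {title}"}).encode()
    elif kind in UNLOCK_EVENTS:
        action = "unlock"
        body = b""
    else:
        return None
    return urllib.request.Request(
        f"{TFC_API}/workspaces/{workspace_id}/actions/{action}",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/vnd.api+json",
        },
    )
```

A real handler would pass the returned request to `urllib.request.urlopen` and handle errors; keeping the mapping pure makes the lock/unlock decision easy to test without touching the network.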

Waking up to a resolved-incident notification still gives me an illicit thrill. Last Tuesday's Kafka meltdown was contained before my alarm clock rang. I rolled over in the dark, phone glowing with Zenduty's all-clear: no adrenaline spike, no dry mouth. Just the soft hum of the AC and my wife's steady breathing beside me. That silent victory tastes better than any coffee.

Keywords: Zenduty, news, incident management, DevOps automation, on-call response